40 ODD: Data Transformations and Tukey’s Ladder of Powers

This optional deep dive introduces data transformations, focusing on Tukey’s Ladder of Powers: a simple family of power transforms that can make distributions easier to work with and relationships easier to model. The examples here follow Fox (2016) (ch. 4, pp. 28-80).

40.1 Why transform?

Transformations are often used to make a relationship closer to linear, reduce curvature, and stabilize variance. The classic example is a log transform, but Tukey’s ladder gives a small family of related choices to try.

40.1.1 Transforming Data: Tukey’s Ladder of Powers

John Tukey introduced a methodological toolkit, likened to a set of drill bits of varying sizes, for modifying the shapes of distributions and relationships between variables. This section delves into these transformations, particularly focusing on the mathematical formulations that underpin this approach.

40.1.2 Dataset Preparation and Visualization

We’ll be using data from Fox’s (2016) book. You may need to download the data manually and save it in the “data” directory within your current working directory. The dataset is available here. You can use this R code to download the data.

download.file("http://socserv.socsci.mcmaster.ca/jfox/Books/Applied-Regression-3E/datasets/UnitedNations.txt", "data/UnitedNations.txt")

#if (!require("p3d")) install.packages("p3d", repos = "http://R-Forge.R-project.org")

#library(p3d)

#library(extras)

library(car)

library(latticeExtra)

library(gridExtra)

#if (!require("spida2")) devtools::install_github("smasongarrison/spida2",ref="patch-1")

library(spida2)

# read data

un <- read.table("data/UnitedNations.txt", header = TRUE) %>%

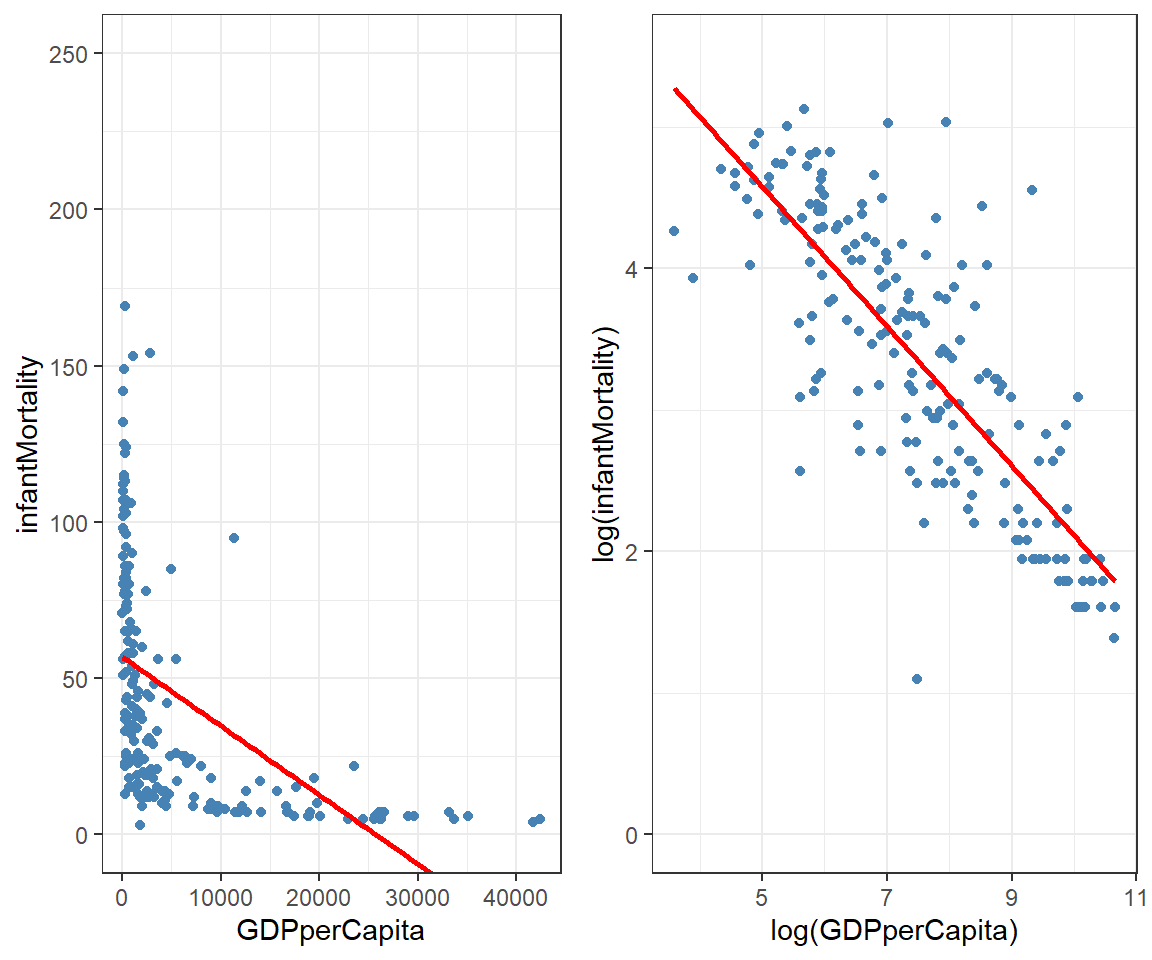

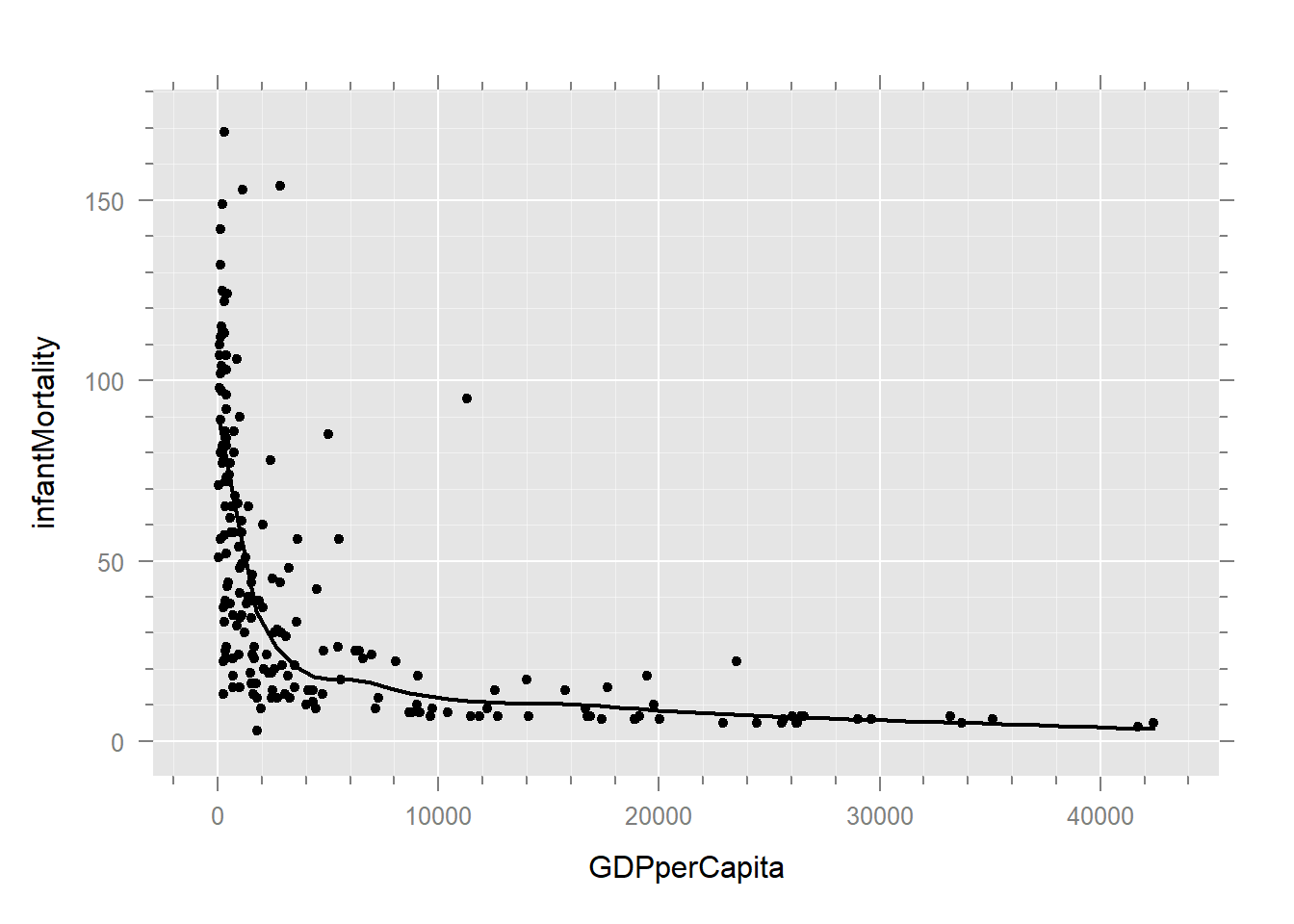

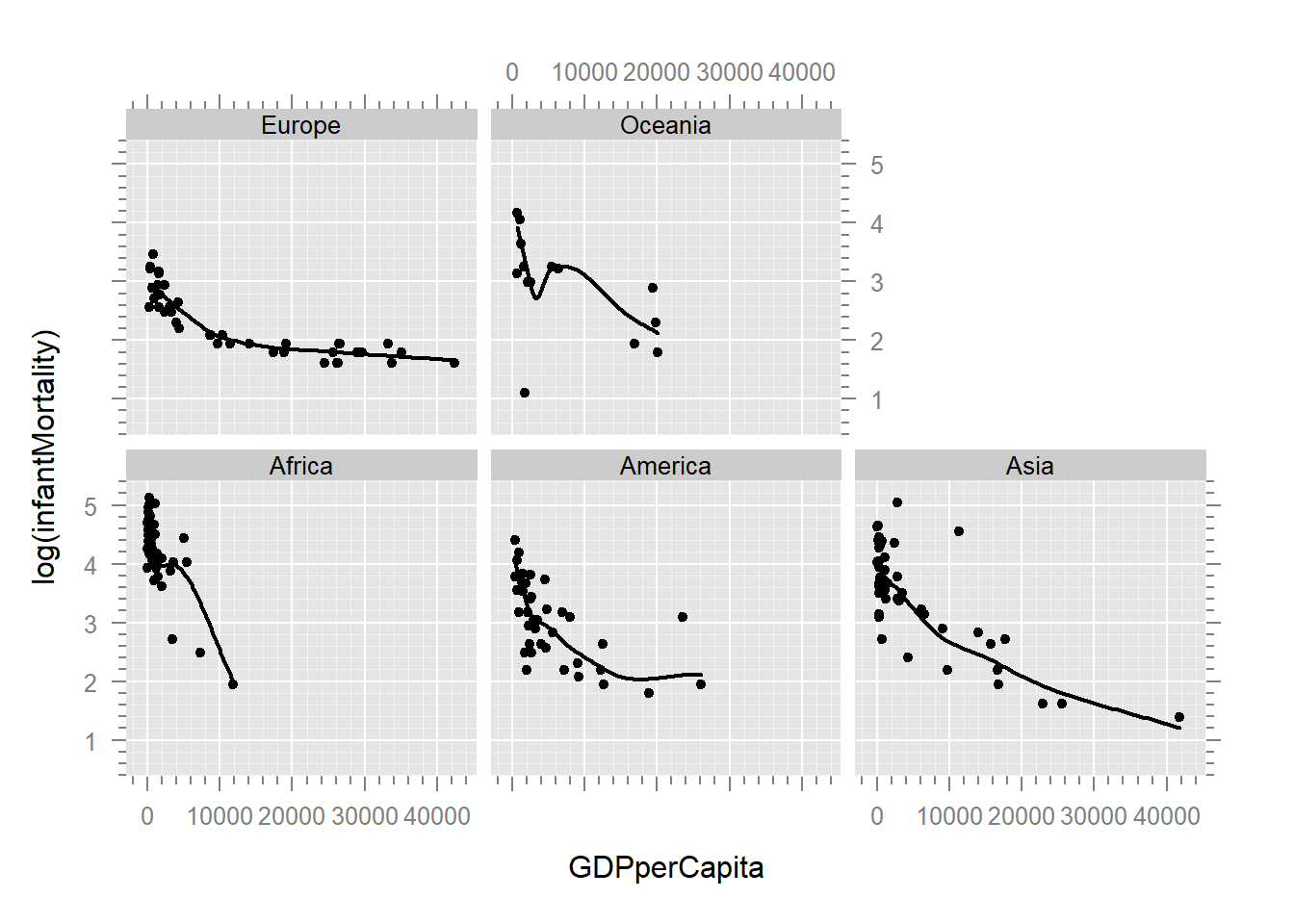

mutate(country = rownames(.)) # Assigning country namesLet’s visualize an attempt to make our regression behave. Below are the original and log-transformed relationships with ggplot2. You’ve likely used a log-transformation before, but we’ll be exploring the more general family of transformations.

The log transformation has straightened out the data, allowing us to fit a more useful regression line. However, we’re not limited to just a log transformation. We can use an entire family of transformations called Tukey’s Ladder of Powers.

40.2 Introduction to Tukey’s Ladder of Powers

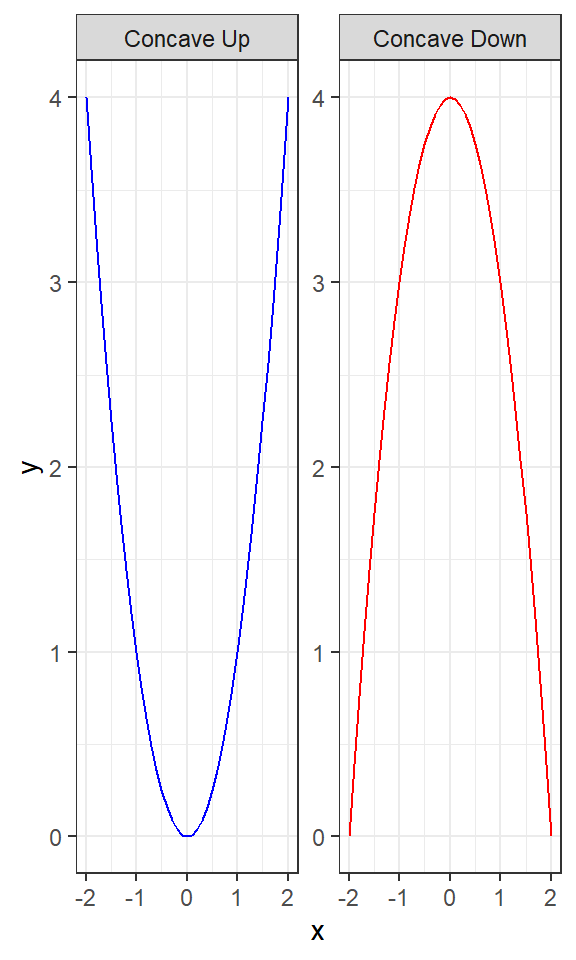

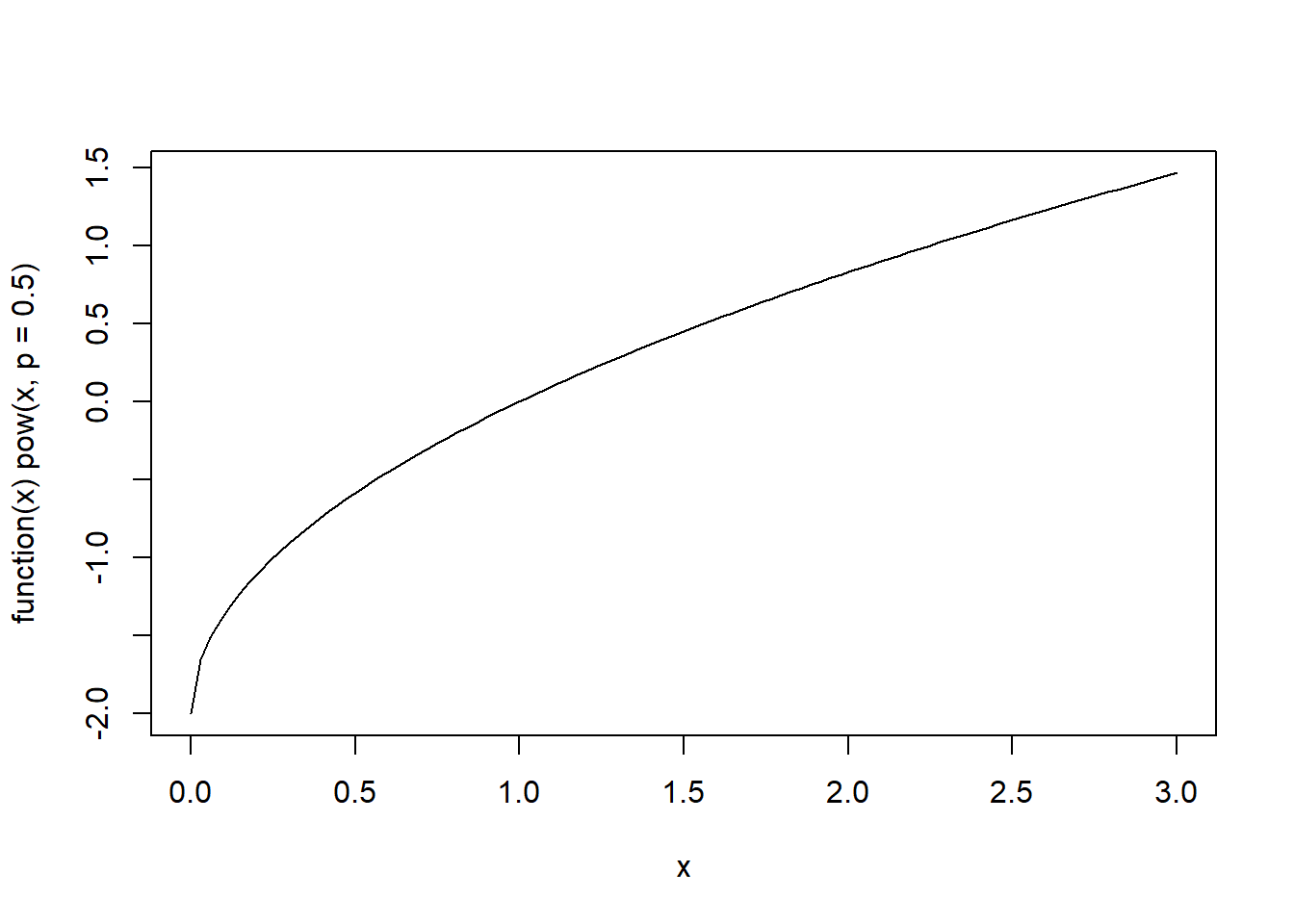

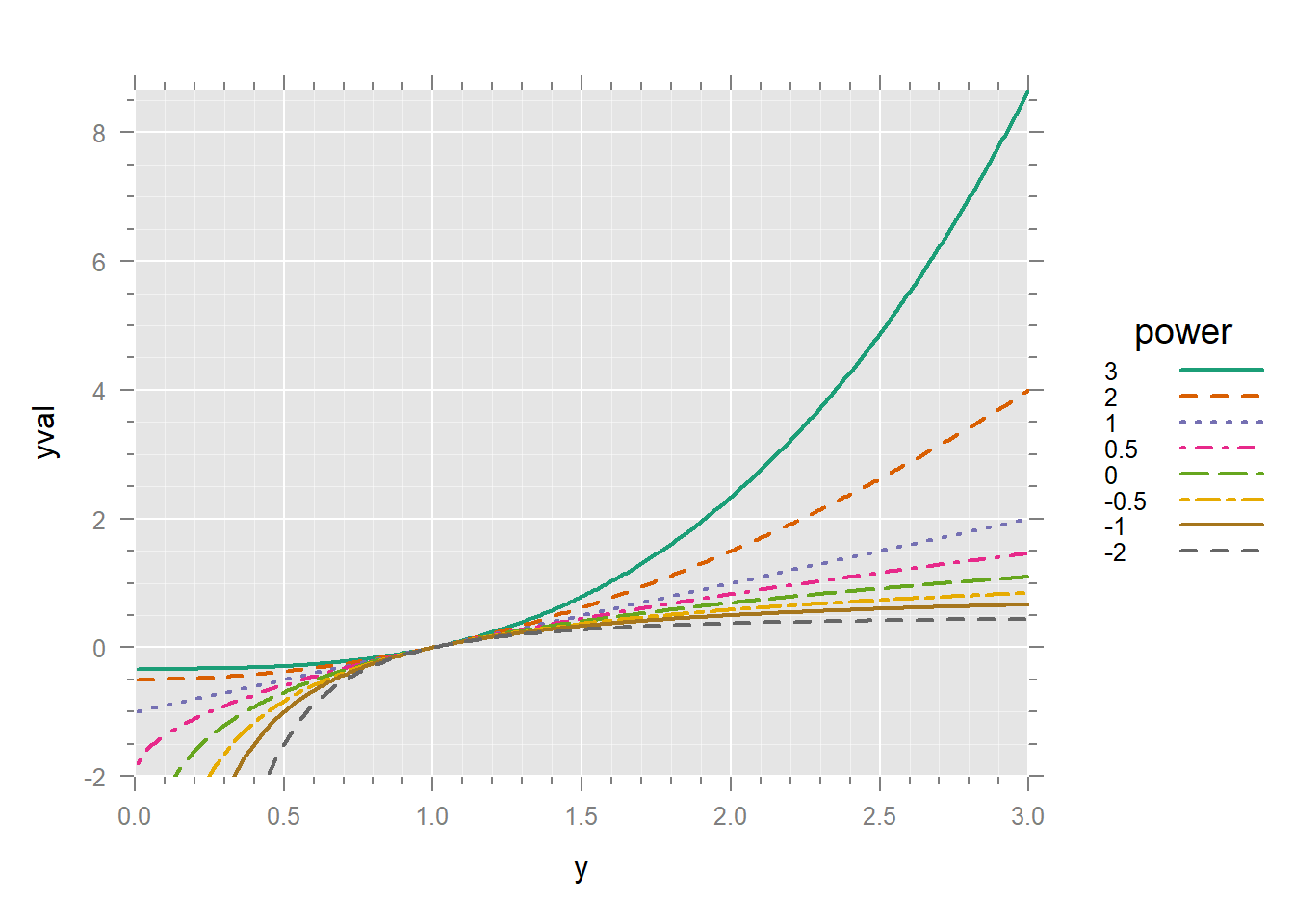

John Tukey suggested this simple toolkit, like a set of drill bits of varying sizes, to modify the shape of distributions and the shape of relationships between variables. The basic idea stems from the fact that functions of the form \(y' = y^p, \quad y > 0\) have a graph that is concave up if \(p >1\) and concave down if \(0<p<1\). For \(p<0\), \(y^p\) itself is decreasing and concave up, so the ladder uses \(-y^p\) (or the rescaled form \((y^p - 1)/p\)), which is increasing and concave down.

Here are some examples to illustrate these concepts:

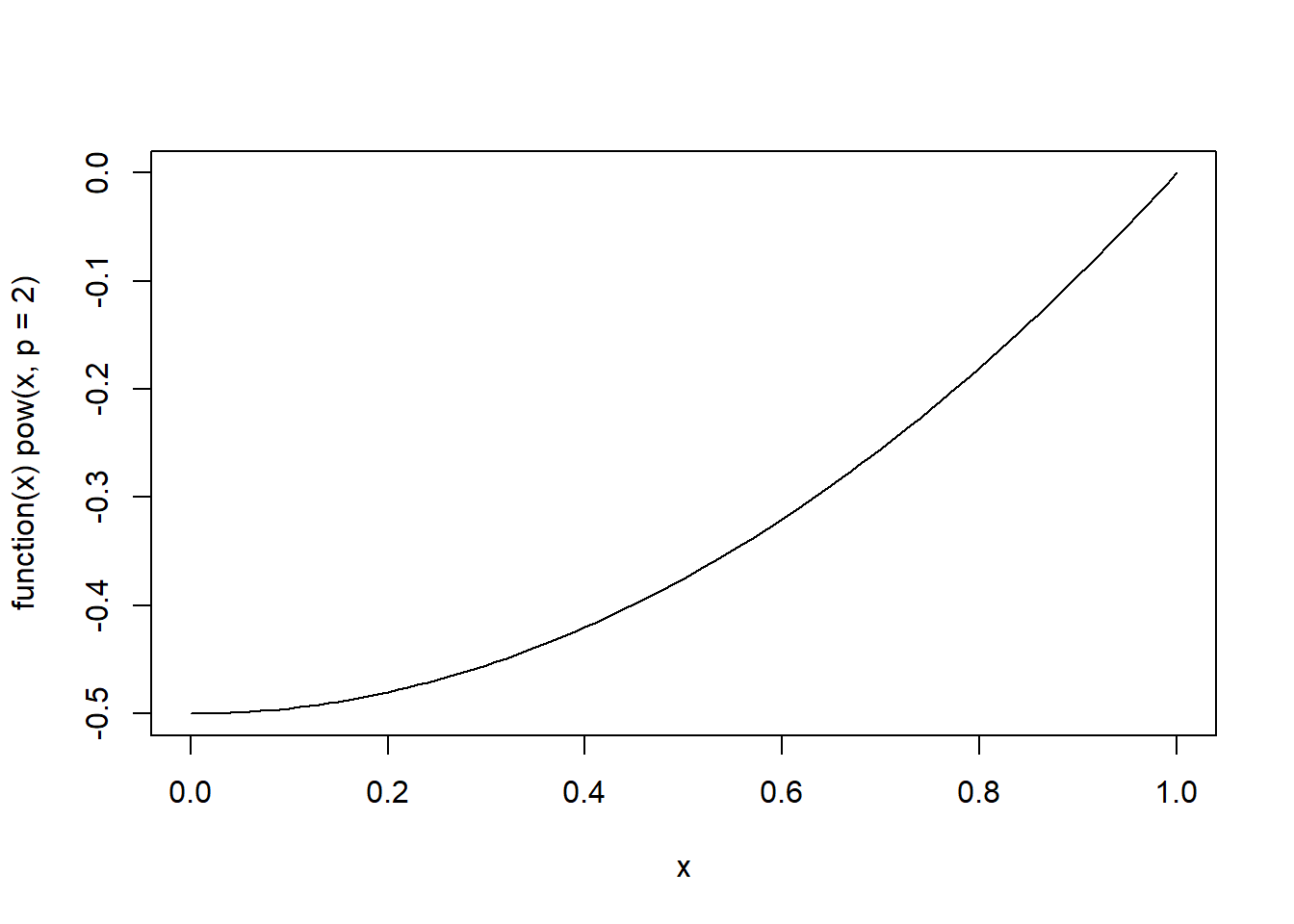

Concave Up (\(y= x^2\)): This plot shows a parabolic curve opening upwards, illustrating the concept of a concave up graph where the slope of the tangent line increases as \(x\) increases.

Concave Down (\(y= -x^2\)): Conversely, this plot shows a parabolic curve opening downwards, illustrating a concave down graph where the slope of the tangent line decreases as \(x\) increases.

We can use this information to modify the shape of a distribution or the shape of a relationship between variables by using a power transformation (\(p\)) on our data.

40.2.1 Mathematical Formulation of Tukey’s Ladder of Powers

To understand Tukey’s Ladder of Powers, let’s start with the general form of the transformation:

\[y' = \frac{y^p - 1}{p}\]

where \(p\) is the power parameter. This formula helps us adjust the shape of the data distribution. Powers below 1 (moving down the ladder: square root, log, reciprocal) compress large values and can help reduce right skewness. Powers above 1 (moving up the ladder: square, cube) stretch large values and can help reduce left skewness.
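A quick numerical check of how skewness changes along the ladder can make this concrete. This is only a sketch: the data are simulated log-normal values rather than the chapter's dataset, and `skewness()` and `ladder()` are helper names introduced here for illustration.

```r
# Sketch: sample skewness of simulated right-skewed data after several
# ladder transformations. skewness() and ladder() are illustrative helpers,
# not functions from the packages used in this chapter.
set.seed(1)
y <- rlnorm(5000)  # simulated, strictly positive, right-skewed

skewness <- function(v) {
  d <- v - mean(v)
  mean(d^3) / mean(d^2)^1.5
}

# Scalar-p ladder transformation; p = 0 is the log
ladder <- function(y, p) if (p == 0) log(y) else (y^p - 1) / p

powers <- c(2, 1, 0.5, 0, -1)
skew_by_power <- sapply(powers, function(p) skewness(ladder(y, p)))
names(skew_by_power) <- paste0("p=", powers)
round(skew_by_power, 2)
```

With data like these, the skewness should shrink toward zero as \(p\) moves from 1 down to 0 and turn negative at \(p = -1\), which is the sense in which going down the ladder pulls in a long right tail.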

Special Case: \(p = 0\)

At \(p = 0\) the formula is undefined (it gives \(0/0\)), so the transformation is defined by its limit, which is the logarithm: as \(p\) approaches 0, \(\frac{y^p - 1}{p}\) approaches \(\ln y\). This follows from l’Hôpital’s rule, a calculus result for evaluating limits of indeterminate forms: differentiate the numerator and the denominator with respect to \(p\), then take the limit of the ratio. For our purposes, we can state that:

\[\lim_{p \to 0} \frac{y^p - 1}{p}= \lim_{p \to 0}\, e^{\, p \ln y} \ln y = \ln y\]

To make this concept more intuitive, imagine \(y^p\) as a function that gets closer and closer to 1 as \(p\) gets closer to 0. The subtraction of 1 and division by \(p\) in the formula adjust this function to reflect the logarithmic behavior.
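The limit can also be checked numerically. The sketch below uses arbitrary positive values of `y` and a few shrinking values of `p` (both chosen here purely for illustration) and reports how far \((y^p - 1)/p\) is from \(\ln y\).

```r
# Sketch: (y^p - 1) / p gets closer to log(y) as p shrinks toward 0.
y  <- c(0.5, 2, 10)            # arbitrary positive test values
ps <- c(1, 0.1, 0.01, 0.001)   # powers approaching 0

# Largest absolute gap between the power form and log(y), for each p
err <- sapply(ps, function(p) max(abs((y^p - 1) / p - log(y))))
data.frame(p = ps, max_abs_diff = err)
```

The gap should fall steadily as `p` approaches 0, which is why the log sits at the \(p = 0\) rung of the ladder.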

40.2.2 Defining the Transformation Function in R

We define a function that produces this transformation. The easy way to define it is:
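A minimal sketch of such a function, assuming the scalar `if (p == 0)` form that the remark about `if(p == 0)` further on refers to:

```r
# Scalar-p version of the ladder transformation. This is an assumed
# reconstruction, not necessarily the exact original definition.
pow <- function(y, p) {
  if (p == 0) {
    log(y)          # limiting case as p -> 0
  } else {
    (y^p - 1) / p   # rescaled power transformation
  }
}

pow(c(1, 2, 4), 2)  # (y^2 - 1) / 2 for each y
pow(c(1, 2, 4), 0)  # same as log(c(1, 2, 4))
```

Here `y` may be a vector, but `p` must be a single number.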

Let’s test this function with a sequence of x values and visualize the transformations for different powers of p.

# Testing the transformation with a sequence of x values

x <- seq(-1, 3, by = .5)

# Applying the transformation for different powers of p

x_transformed <- tibble(

x = x,

`p=2` = pow(x, 2),

`p=0` = pow(x, 0),

`p=-1` = pow(x, -1)

)

# Visualizing the transformed data

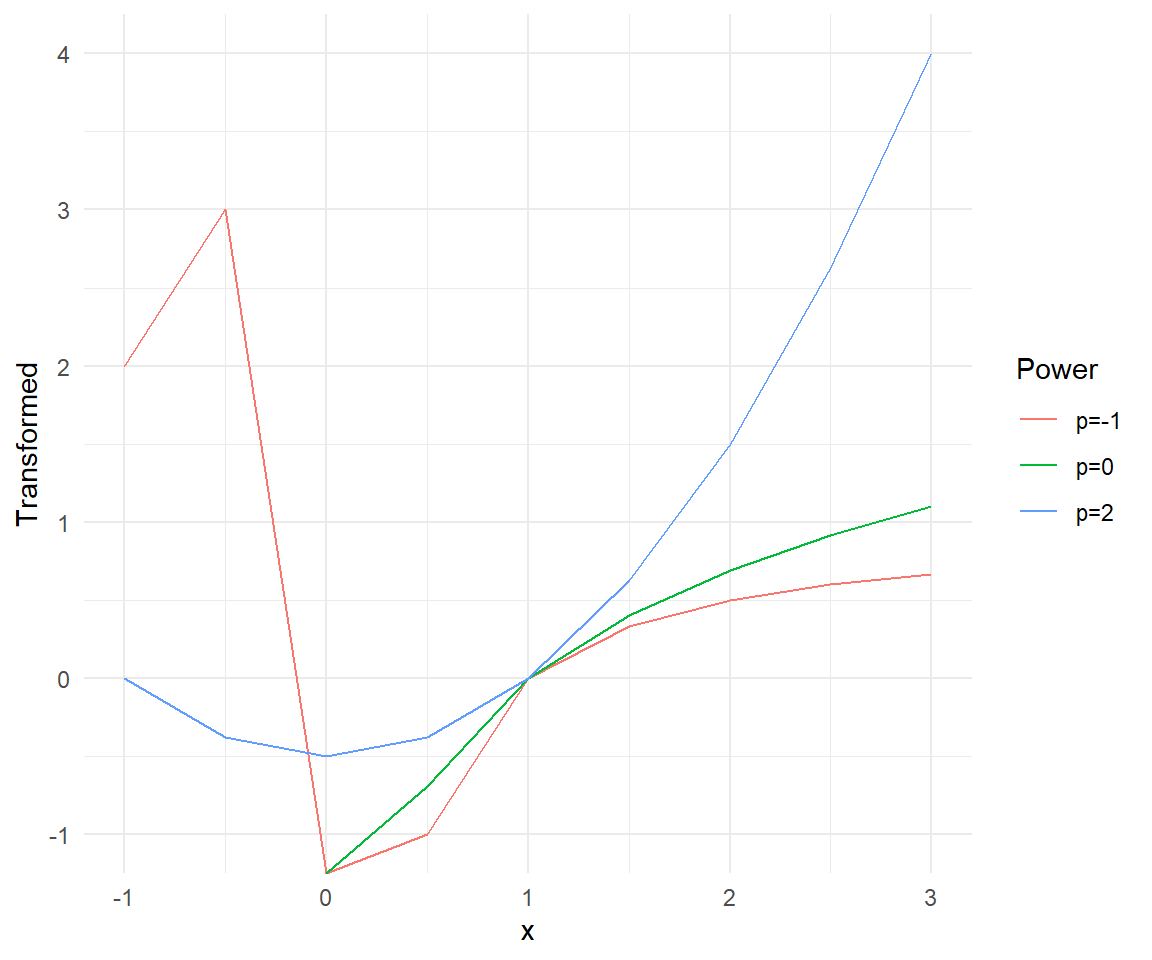

x_transformed_long <- pivot_longer(x_transformed, cols = starts_with("p"), names_to = "Power", values_to = "Transformed")

Let’s visualize the transformed data for different powers of p using ggplot2.

ggplot(x_transformed_long, aes(x = x, y = Transformed, color = Power)) +

geom_line() +

labs(title = "Visualization of Power Transformations", x = "Original x", y = "Transformed x") +

theme_minimal()

Looking at the plot, we can see how different values of \(p\) affect the transformation of the data. The transformation with \(p=2\) shows a concave up shape, while \(p=-1\) shows a concave down shape. The logarithmic transformation (\(p=0\)) provides a middle ground between these two extremes.
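The concavity claims can be checked numerically with second differences: on an equally spaced grid, positive second differences indicate a concave-up curve and negative ones a concave-down curve. The sketch below restricts attention to \(y > 0\), where the transformations are intended to be used; `ladder()` and `second_diff()` are helper names introduced here.

```r
# Sketch: check concavity of (y^p - 1) / p on y > 0 via second differences.
# ladder() and second_diff() are illustrative helpers.
ladder <- function(y, p) if (p == 0) log(y) else (y^p - 1) / p

y <- seq(0.5, 3, by = 0.25)  # equally spaced, strictly positive grid
second_diff <- function(p) diff(ladder(y, p), differences = 2)

all(second_diff(2)  > 0)  # TRUE means concave up
all(second_diff(0)  < 0)  # TRUE means concave down
all(second_diff(-1) < 0)  # TRUE means concave down
```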

# test:

x <- seq(-1, 3, by = .5)

x # note that these transformations are really intended for y > 0

#> [1] -1.0 -0.5 0.0 0.5 1.0 1.5 2.0 2.5 3.0

pow(x, 2)

#> [1] 0.000 -0.375 -0.500 -0.375 0.000 0.625 1.500 2.625 4.000

# pow(x, 0) %>%

# name(x)

# pow(x, -1) %>%

# name(x) %>%

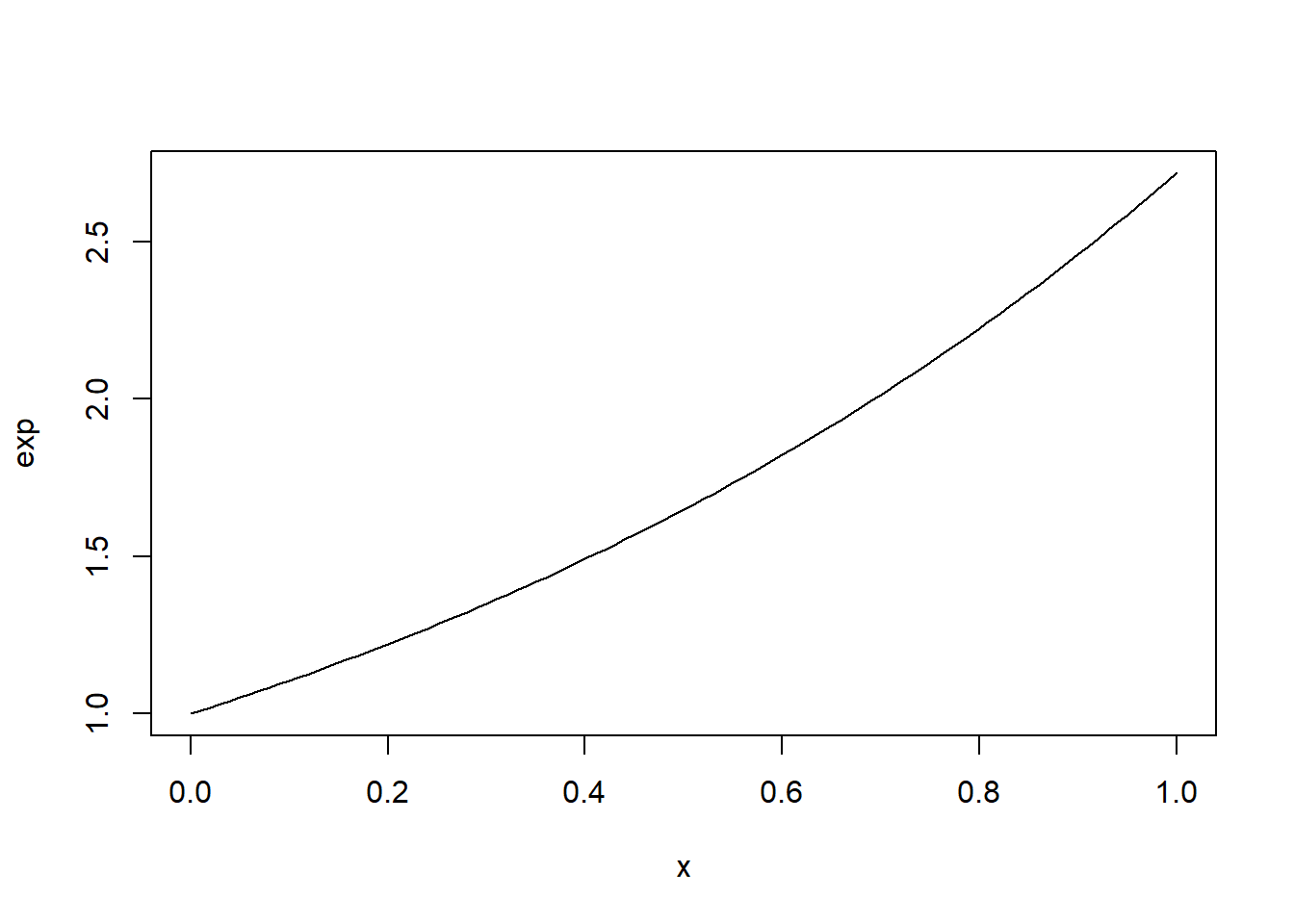

# cbind()

Because functions are first-class objects in R, we can also define the exponential function and plot it directly.

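However, this approach works correctly only for a single value of \(p\), because if(p == 0) expects a condition of length one. In R versions before 4.2.0 it tested only the first element of p (with a warning); from R 4.2.0 onward a longer condition is an error.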
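One quick workaround, shown here as a sketch, is base R's `Vectorize()`, which wraps a scalar function so that it is called once per element. The name `pow_scalar()` is an assumed scalar definition used only for this illustration.

```r
# Assumed scalar definition: works for one value of p at a time
pow_scalar <- function(y, p) {
  if (p == 0) log(y) else (y^p - 1) / p
}

# Vectorize() returns a wrapper that calls pow_scalar() once per (y, p) pair
# (via mapply), so `if` only ever sees a single value of p.
pow_each <- Vectorize(pow_scalar)

pow_each(c(1, 2, 3), c(0, 1, 2))  # log(1), (2^1 - 1)/1, (3^2 - 1)/2
```

This is convenient, but it loops over the elements one call at a time, so it is slower than a definition built from vectorized operations.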

40.3 Vectorizing a function

Most operators in R are vectorized, so they work element-wise when their arguments are vectors. When the arguments have different lengths, the shorter argument is recycled to the length of the longer one. That is why the following produces sensible results:

z <- c(3, 5, 9)

z + c(1, 1, 1)

#> [1] 4 6 10

z + 1 # 1 is recycled so the result is equivalent to the previous line

#> [1] 4 6 10

z + c(1, 2, 3)

#> [1] 4 7 12

z + c(1, 2) # recycles but gives a warning

#> Warning in z + c(1, 2): longer object length is not a multiple of shorter

#> object length

#> [1] 4 7 10

z + z

#> [1] 6 10 18

z^2

#> [1] 9 25 81

z^z

#> [1] 2.70e+01 3.12e+03 3.87e+08

We can use ifelse, which works on a vector instead of a single value.

pow <- function(y, p) {

p <- rep(p, length.out = length(y))

y <- rep(y, length.out = length(p))

ifelse(p == 0, log(y), (y^p - 1) / p)

}

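# pow() above is already vectorized over y and p. For comparison, purrr's

# map2_dbl() applies it pair by pair and returns a numeric vector

# (map2() would return a list instead).

vectorized_pow <- function(y, p) {

map2_dbl(y, p, pow)

}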

# test:

pow(-1:4, c(2, 0, -1, 1, 3))

#> Warning in log(y): NaNs produced

#> [1] 0.00 -Inf 0.00 1.00 8.67 7.50

pow(-1:4, 2)

#> [1] 0.0 -0.5 0.0 1.5 4.0 7.5

With a bit more work, we can avoid unnecessary evaluations:

pow <- function(y, p) {

p <- rep(p, length.out = length(y))

y <- rep(y, length.out = length(p))

y[p == 0] <- log(y[p == 0])

y[p != 0] <- (y[p != 0]^p[p != 0] - 1) / p[p != 0]

y

}

# Test:

pow(1:10, 0) == log(1:10)

#> [1] TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE TRUE

pow(1:10, -1)

#> [1] 0.000 0.500 0.667 0.750 0.800 0.833 0.857 0.875 0.889 0.900

pow(1:10, .5)

#> [1] 0.000 0.828 1.464 2.000 2.472 2.899 3.292 3.657 4.000 4.325

pow(1:10, -1:8)

#> [1] 0.00e+00 6.93e-01 2.00e+00 7.50e+00 4.13e+01 3.24e+02 3.36e+03 4.37e+04

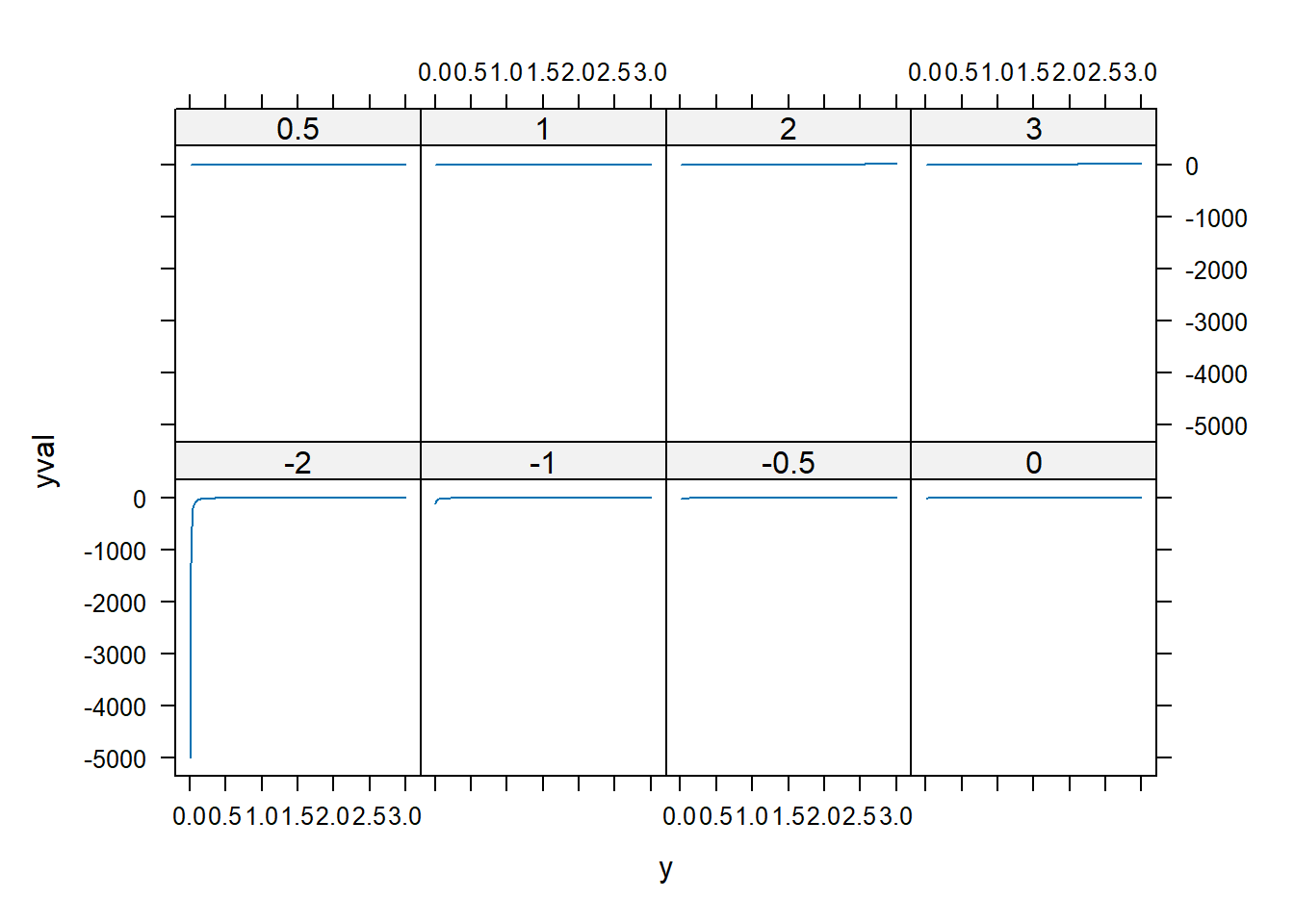

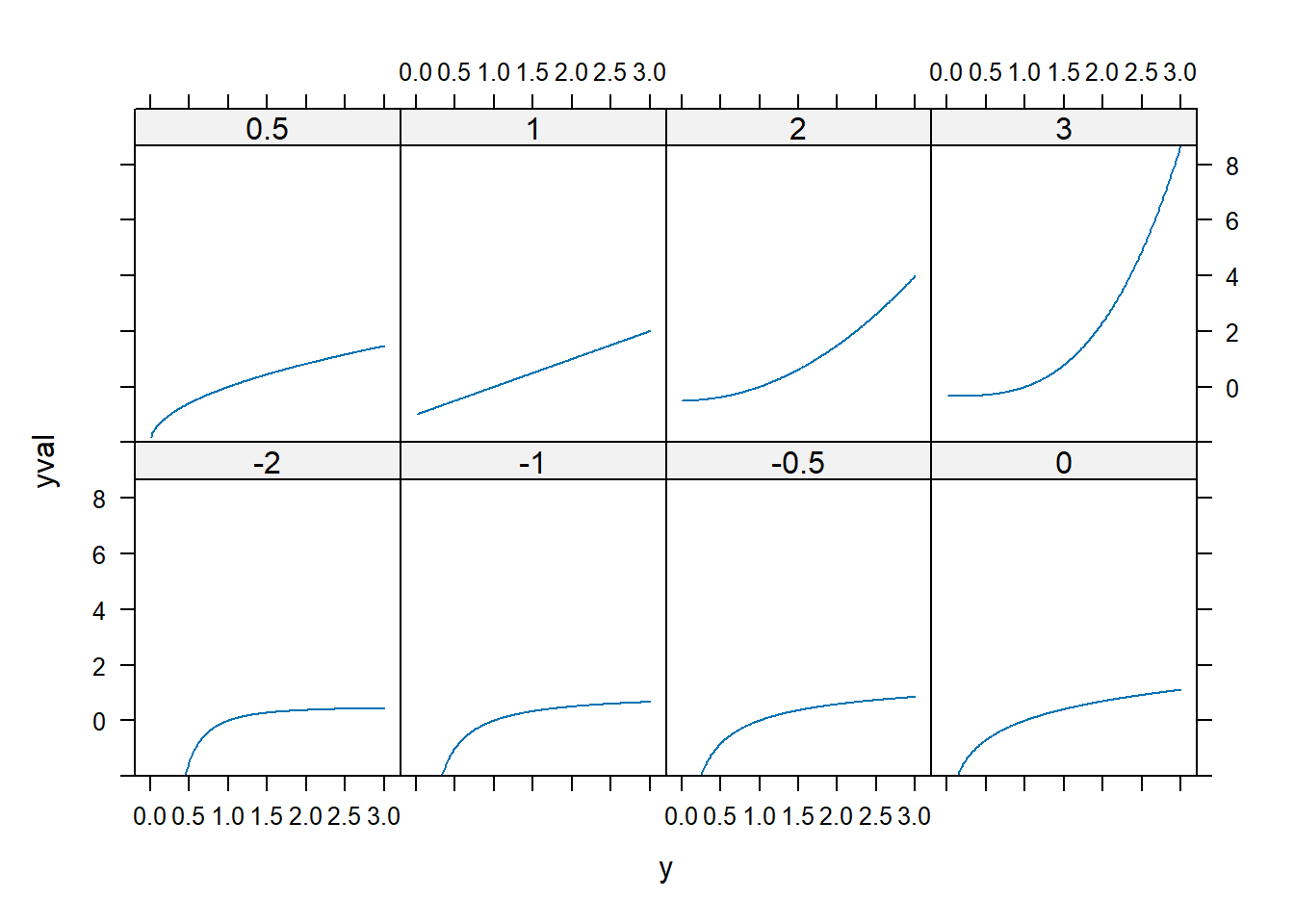

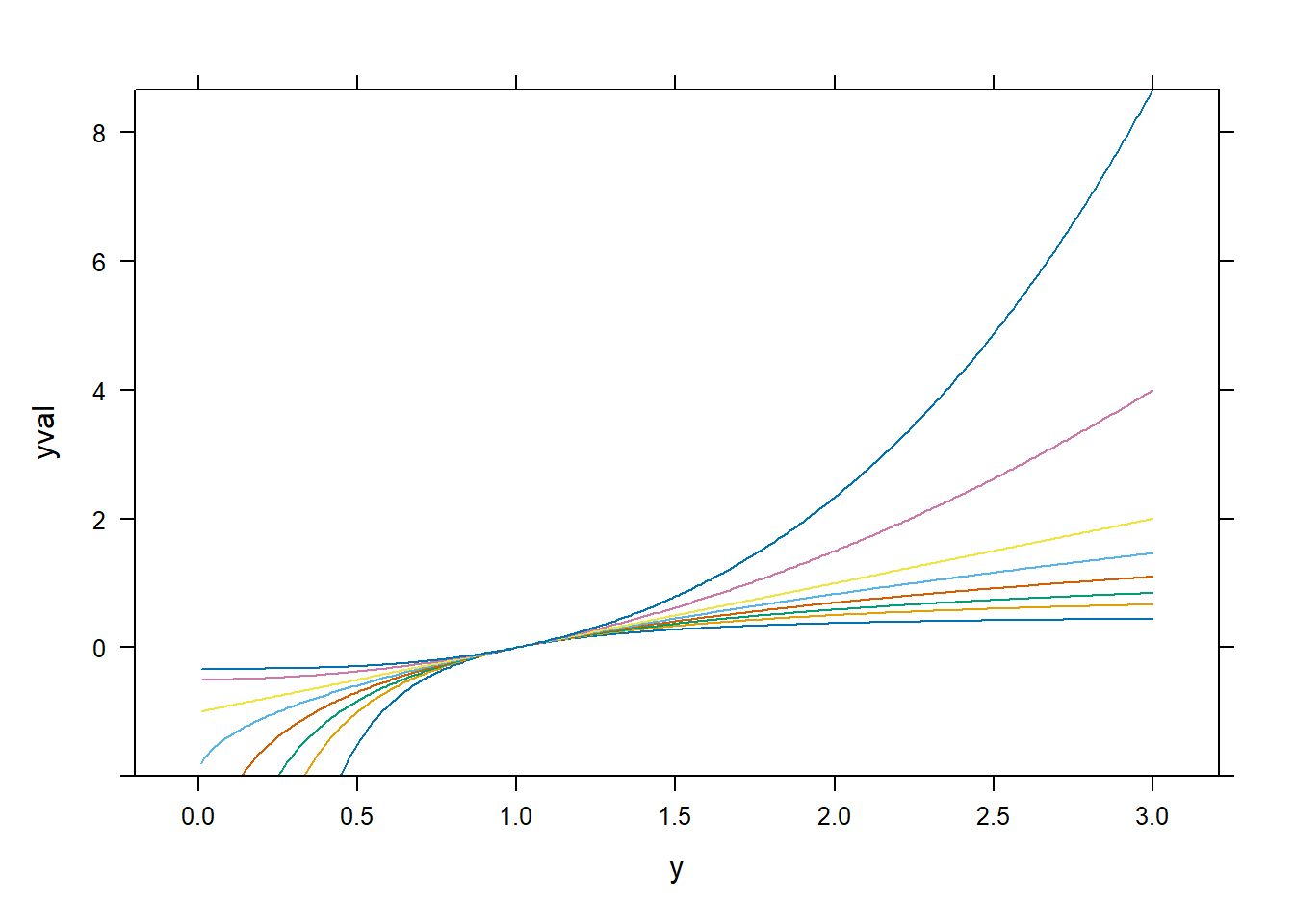

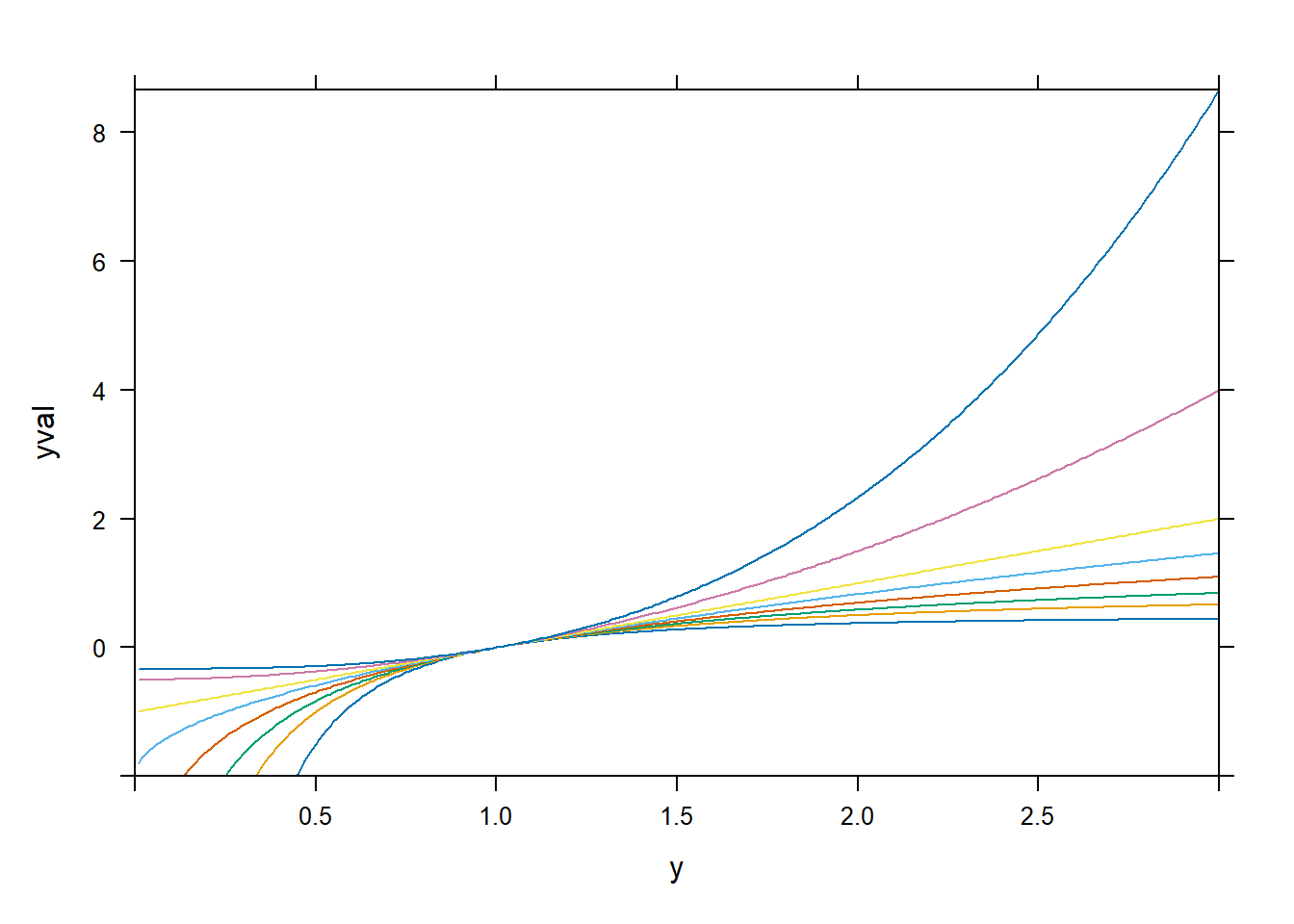

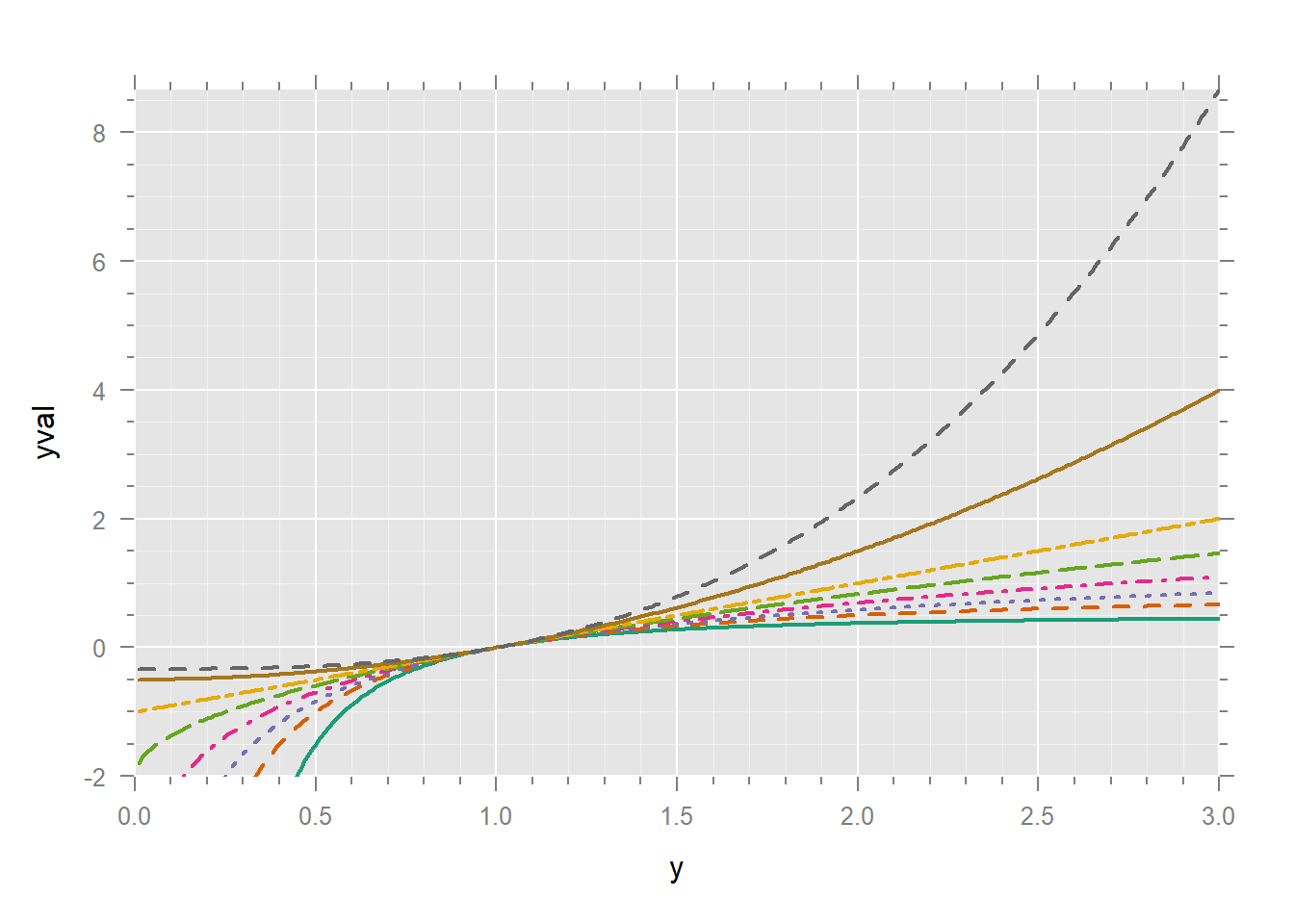

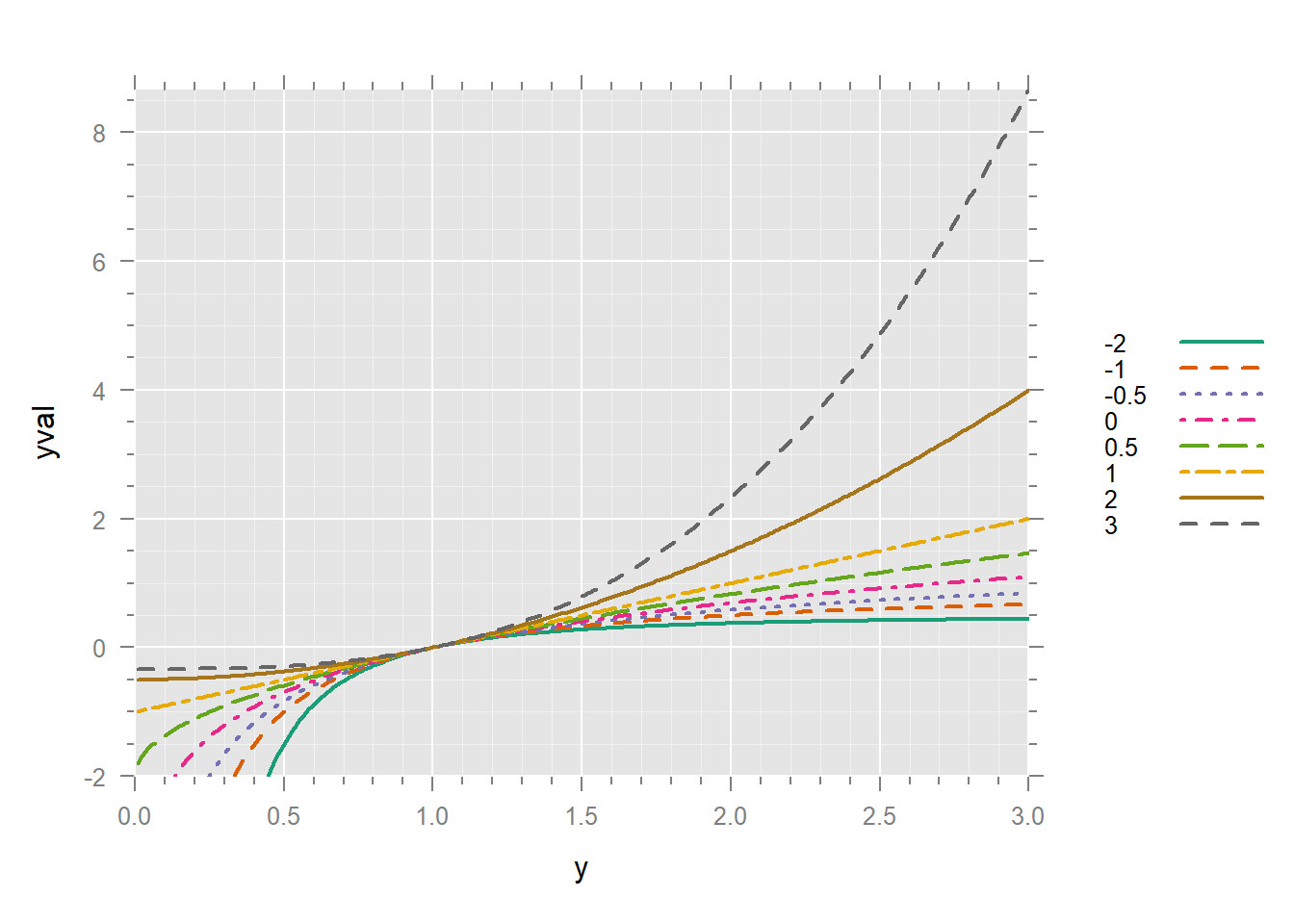

#> [9] 6.83e+05 1.25e+07Let’s plot this transformation for a range of values of \(p\). The value of expand.grid is a data frame whose rows consist of the Cartesian product (i.e. all possible combinations) of its arguments.

expand.grid(a = c("A", "B"), x = 1:3)

#> a x

#> 1 A 1

#> 2 B 1

#> 3 A 2

#> 4 B 2

#> 5 A 3

#> 6 B 3

dd <- expand.grid(y = seq(.01, 3, .01), p = c(-2, -1, -.5, 0, .5, 1, 2, 3))

dim(dd)

#> [1] 2400 2

head(dd)

#> y p

#> 1 0.01 -2

#> 2 0.02 -2

#> 3 0.03 -2

#> 4 0.04 -2

#> 5 0.05 -2

#> 6 0.06 -2

some(dd) # 10 rows at random

#> y p

#> 193 1.93 -2.0

#> 697 0.97 -0.5

#> 767 1.67 -0.5

#> 979 0.79 0.0

#> 1515 0.15 1.0

#> 1718 2.18 1.0

#> 1848 0.48 2.0

#> 2172 0.72 3.0

#> 2199 0.99 3.0

#> 2219 1.19 3.0

dd$yval <- with(dd, pow(y, p))

xyplot(yval ~ y | factor(p), dd, type = "l")

spida2::gd(8, lwd = 2) # number of colours needed

xyplot(yval ~ y, dd,

groups = p,

type = "l",

xlim = c(0, 3),

ylim = c(-2, max(dd$yval))

)

xyplot(yval ~ y, dd,

groups = p,

type = "l",

auto.key = list(space = "right", lines = TRUE, points = FALSE),

xlim = c(0, 3),

ylim = c(-2, max(dd$yval))

)

It’s much better to have the legend in the same order as the lines in the graph. We can turn p into a factor and reverse its order.

dd$po <- factor(dd$p)

dd$po <- reorder(dd$po, -dd$p)

xyplot(yval ~ y, dd,

groups = po,

type = "l",

auto.key = list(space = "right", lines = TRUE, points = FALSE, title = "power"),

xlim = c(0, 3),

ylim = c(-2, max(dd$yval))

)

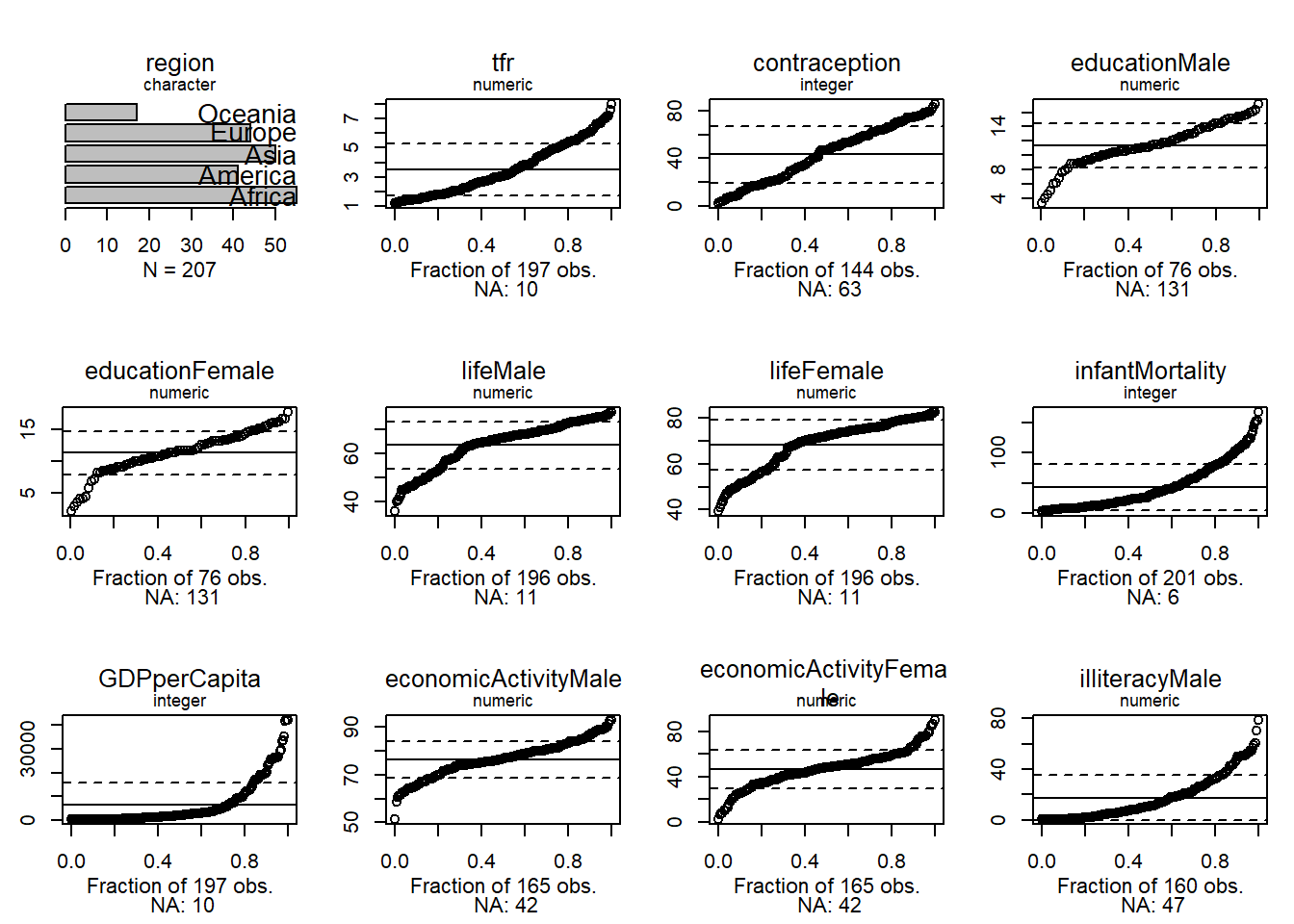

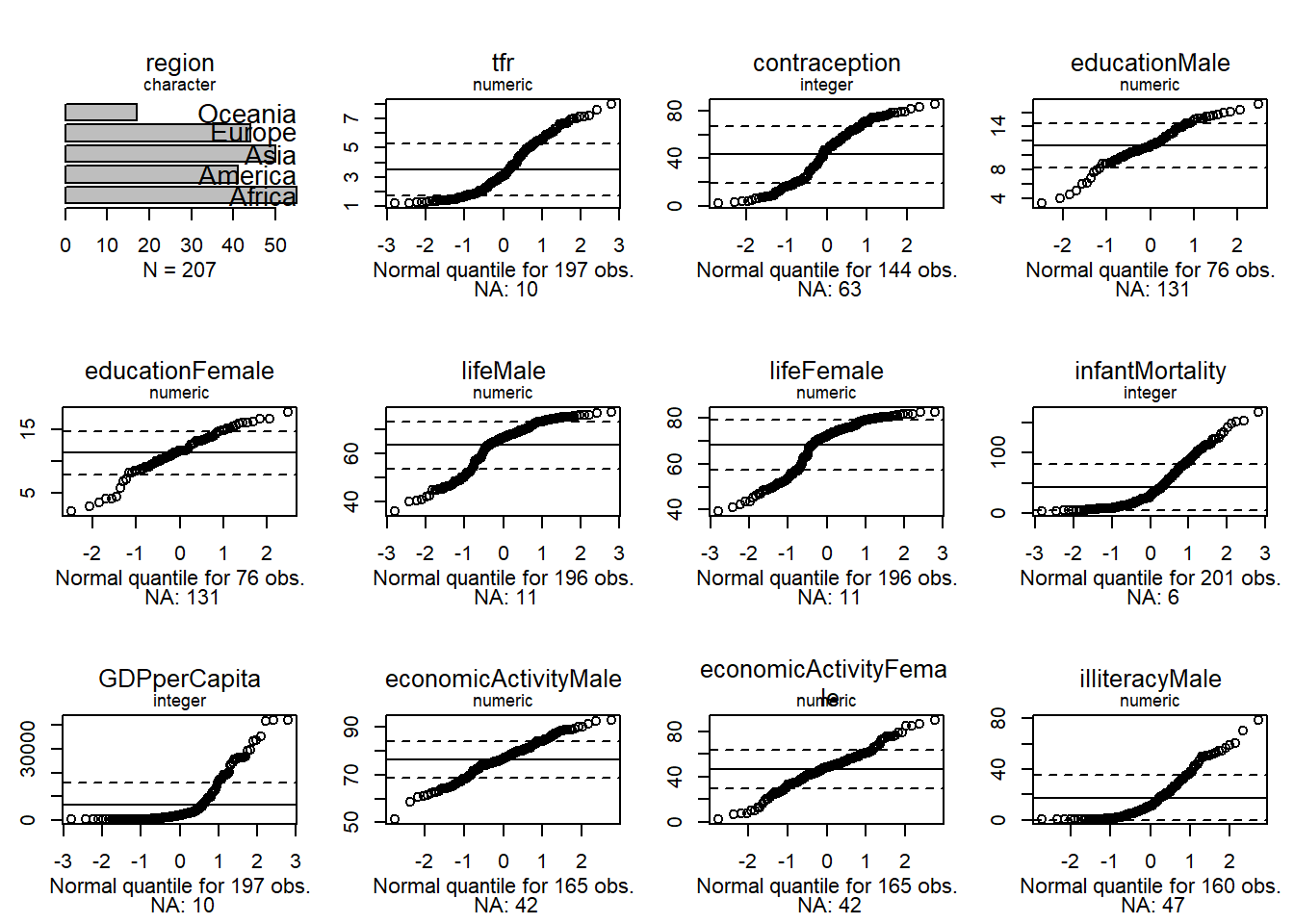

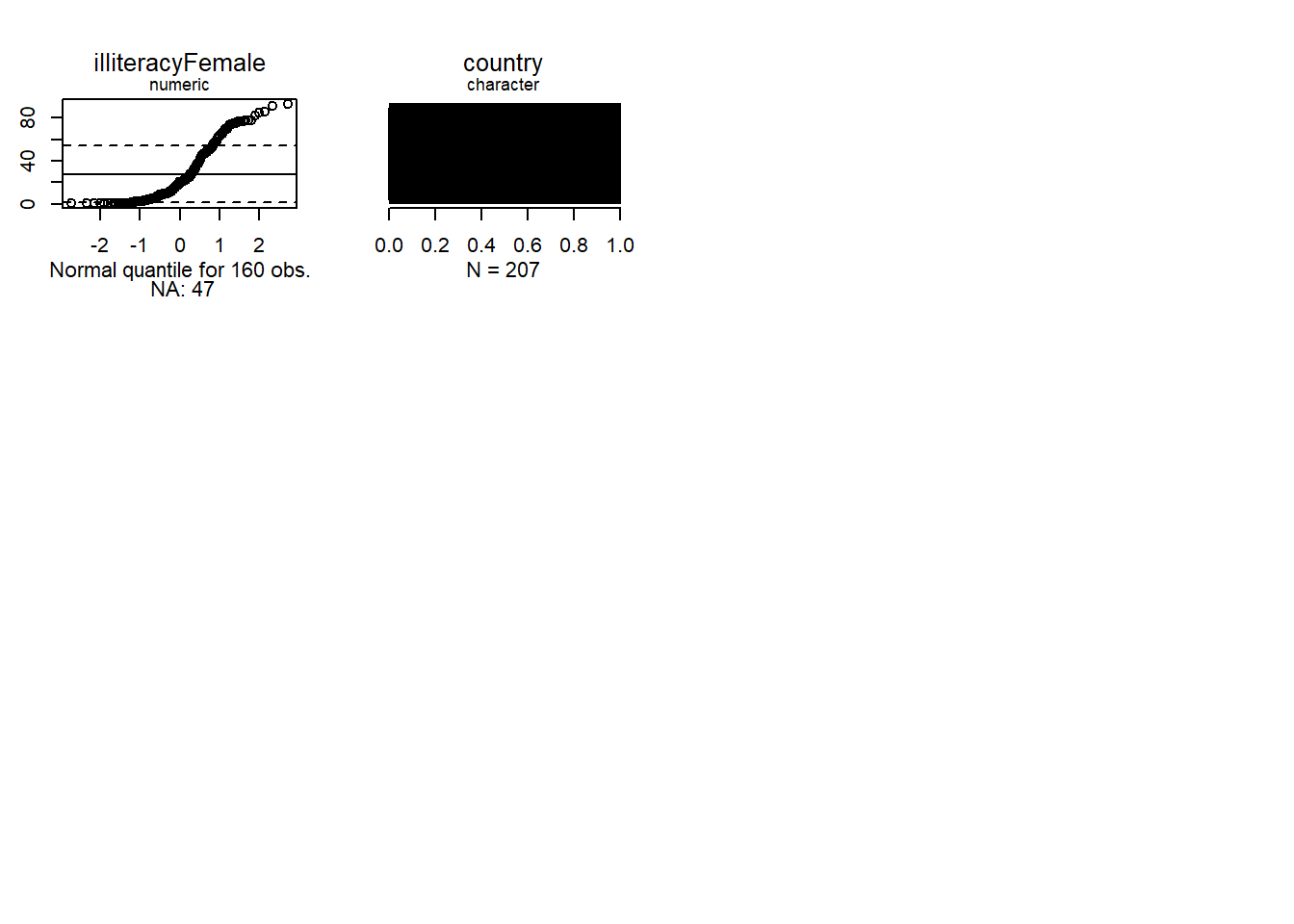

40.4 Quantile plots

Uniform quantiles let us see how far a distribution is from uniform.

Normal quantiles let us see how far a distribution is from normal.
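As a sketch of how such plots can be drawn in base R, the example below uses a simulated right-skewed sample (an assumption made for illustration; it is not the chapter's dataset).

```r
# Sketch: uniform and normal quantile plots for a simulated sample.
set.seed(2)
x <- rexp(200)  # simulated right-skewed data
n <- length(x)

op <- par(mfrow = c(1, 2))  # two panels side by side

# Uniform quantile plot: sorted data against evenly spaced probabilities
plot(ppoints(n), sort(x),
     xlab = "Fraction of data", ylab = "x", main = "Uniform quantiles")

# Normal quantile plot: sorted data against standard normal quantiles
qqnorm(x, main = "Normal quantiles")
qqline(x)  # reference line through the first and third quartiles

par(op)  # restore the previous plotting layout
```

Points falling close to a straight line in the left panel suggest a roughly uniform distribution; in the right panel they suggest a roughly normal one.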

We see that none of the numeric variables have normal distributions.

- ‘age’ is somewhat platykurtic compared with a normal

- ‘compositeHourlyWages’ has both a categorical (0) and a continuous component

- ‘education’ is also platykurtic

- ‘working’ is dichotomous

- ‘familyIncome’ is skewed to the right

Note that the fact that \(x\) or \(y\) variables are not normal does not mean that the conditional distribution of \(y\) given \(x\) is not normal.
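A small simulation illustrates the point. In this sketch (simulated data, with `skewness()` as an illustrative helper), the predictor is strongly skewed and so is the marginal distribution of \(y\), yet the errors around the regression line are normal by construction.

```r
# Sketch: non-normal x and y, but normal conditional distribution of y given x.
set.seed(3)
n <- 5000
x <- rexp(n)               # skewed predictor
y <- 1 + 2 * x + rnorm(n)  # normal errors around a linear mean

skewness <- function(v) {
  d <- v - mean(v)
  mean(d^3) / mean(d^2)^1.5
}

fit <- lm(y ~ x)

# Marginal skewness of y versus skewness of the residuals
c(y = skewness(y), residuals = skewness(resid(fit)))
```

The marginal skewness of `y` should be clearly positive while the residual skewness stays near zero, so judging normality from the raw variables alone can be misleading.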

Let’s explore infant mortality as a function of GDP per capita in the UN data.

library(latticeExtra)

un %>%

xyplot(infantMortality ~ GDPperCapita, .) +

layer(panel.loess(..., lwd = 2))

# Scatterplot showing curvature in relationship

trellis.focus()

panel.identify(labels = rownames(un))

#> integer(0)

trellis.unfocus()

un %>% subset(country %in% c("United.States", "Canada"))

#> region tfr contraception educationMale educationFemale lifeMale

#> Canada America 1.61 66 17.2 17.8 76.1

#> United.States America 1.96 71 15.4 16.2 73.4

#> lifeFemale infantMortality GDPperCapita economicActivityMale

#> Canada 81.8 6 18943 72.4

#> United.States 80.1 7 26037 74.9

#> economicActivityFemale illiteracyMale illiteracyFemale

#> Canada 57.6 NA NA

#> United.States 59.3 2.24 2.23

#> country

#> Canada Canada

#> United.States United.States

Below would be a 3D plot showing curvature. However, we can’t get the p3d package to install properly right now. The 3D plot would help visualize curvature between wage and education, and heteroskedasticity in wage as a function of education.

if (require("p3d")) {

if(FALSE){

library(p3d)

un %>%

xyplot(sqrt(wage) ~ education, .) +

layer(panel.loess(...))

Init3d()

Plot3d(log(infantMortality) ~ GDPperCapita + lifeFemale | region, un)

Id3d()

Id3d('United.States')

Id3d('Canada')

rownames(un)

names(un)

}

}

#> Loading required package: p3d

#> Loading required package: rgl

#> Loading required package: mgcv

#> Loading required package: nlme

#>

#> Attaching package: 'nlme'

#> The following object is masked from 'package:spida2':

#>

#> getData

#> The following object is masked from 'package:dplyr':

#>

#> collapse

#> This is mgcv 1.9-4. For overview type '?mgcv'.

#>

#> Attaching package: 'p3d'

#> The following objects are masked from 'package:spida2':

#>

#> cell, center, ConjComp, dell, disp, ell, ell.conj, ellbox, ellplus,

#> ellpt, ellptc, elltan, elltanc, uv

#> The following object is masked from 'package:car':

#>

#> Identify3d

#> The following object is masked from 'package:knitr':

#>

#> spin

40.5 Box-Cox Transformation

Box-Cox is the same basic idea, framed as a parameter estimation problem. The transformation is:

\[ y(\lambda) = \begin{cases} \frac{y^\lambda - 1}{\lambda}, & \text{if }\lambda \neq 0 \\ \ln(y), & \text{if } \lambda = 0 \end{cases} \]

The Box-Cox transformation is similar to Tukey’s Ladder of Powers, with the parameter \(\lambda\) playing a role analogous to \(p\). The transformation is used to stabilize variance and make the data more normally distributed. I won’t go into more detail here since it’s very similar to what we’ve already discussed. If you’re curious to see it in action, I have published a paper that used the Box-Cox transformation: Garrison, S. M. & Rodgers, J. L. (2019). Decomposing the causes of the socioeconomic status-health gradient with biometrical modeling. Journal of Personality and Social Psychology, 116(4), 616–634. https://doi.org/10.1037/pspp0000226
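To show what "framed as a parameter estimation problem" can look like in practice, here is a hedged sketch using `boxcox()` from the MASS package on simulated data. The data-generating model (where the log of the response is linear in `x`, so \(\lambda\) near 0 is expected) and the names `dat`, `fit`, and `lambda_hat` are assumptions made for this illustration.

```r
# Sketch: estimate the Box-Cox lambda by profile likelihood on simulated data.
set.seed(4)
n <- 500
dat <- data.frame(x = runif(n, 0, 4))
dat$y <- exp(1 + 0.5 * dat$x + rnorm(n, sd = 0.5))  # log(y) is linear in x

# y = TRUE stores the response in the fitted object, which boxcox() uses
fit <- lm(y ~ x, data = dat, y = TRUE)

# Profile log-likelihood over a grid of lambda values (no plot drawn)
bc <- MASS::boxcox(fit, lambda = seq(-2, 2, by = 0.01), plotit = FALSE)

lambda_hat <- bc$x[which.max(bc$y)]  # grid value with the highest likelihood
lambda_hat
```

An estimate close to 0 would point to the log transformation; in applied work the estimate is usually rounded to a nearby interpretable rung of the ladder rather than used to several decimals.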

40.5.1 Video Explanation

This video was made by math et al. I like their channel and found this video to be a good one.

40.5.2 Additional Resources

Salvatore S. Mangiafico’s Summary and Analysis of Extension Program Evaluation in R, rcompanion.org/handbook/. Pdf version

http://blackwell.math.yorku.ca/math4939/lectures/transforming_data_tukeys_ladder_of_powers.html

https://thomaselove.github.io/431-notes/re-expression-tukeys-ladder-box-cox-plot.html