40 Plots Behaving Badly: Lessons in Data Misrepresentation

This section explores common pitfalls in data visualization, inspired by Karl W. Broman’s talk on how default plotting choices, like those in Microsoft Excel, can “obscure your data and annoy your readers”. We’ll examine how to intentionally create bad plots to better understand good design practices. Broman’s lecture was in turn inspired by [Howard Wainer’s 1984 paper: How to display data badly. American Statistician 38(2): 137–147](https://www.jstor.org/stable/2683253). Wainer was the first to set forth the principles of the bad display of data. But according to Broman, “The now widespread use of Microsoft Excel has resulted in remarkable advances in the field.”

40.1 General Principles

The aim of good data graphics is to display data accurately and clearly. Some rules for displaying data badly:

- Display as little information as possible.

- Obscure what you do show (with chart junk).

- Use pseudo-3d and color gratuitously.

- Make a pie chart (preferably in color and 3d).

- Use a poorly chosen scale.

- Ignore significant figures.

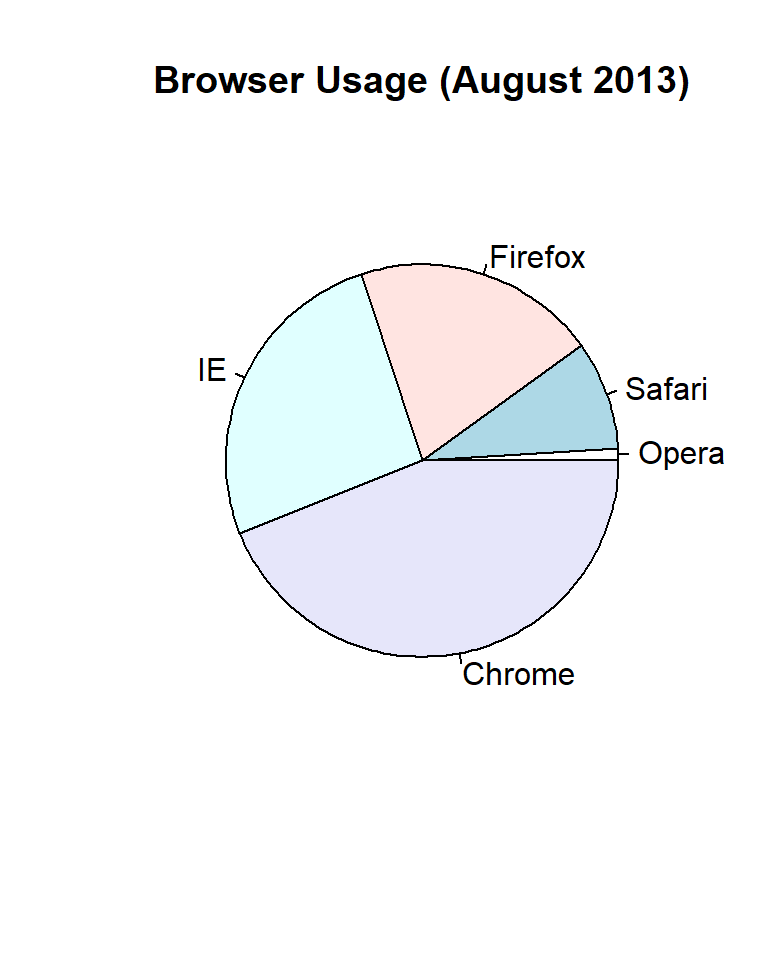

40.1.1 The Problem with Pie Charts

Pie charts are criticized for their inefficiency in conveying information. They do not allow easy comparison of sections because humans are better at comparing lengths than at comparing angles and areas.

40.1.1.1 Displaying Browser Usage Data with a Pie Chart

Consider a poll asking about browser preferences collected in August 2013. The typical display method would be a pie chart:

browsers <- c(Opera = 1, Safari = 9, Firefox = 20, IE = 26, Chrome = 44)

pie(browsers, main = "Browser Usage (August 2013)")

40.1.1.2 Why Pie Charts Fail

The pie function’s help file itself warns against using pie charts for presentation:

Pie charts are a very bad way of displaying information. The eye is good at judging linear measures and bad at judging relative areas. A bar chart or dot chart is a preferable way of displaying this type of data.

To test this, try determining the exact market shares of the browsers from the pie chart above. It’s challenging to assess precise proportions just by looking at the angles and areas.

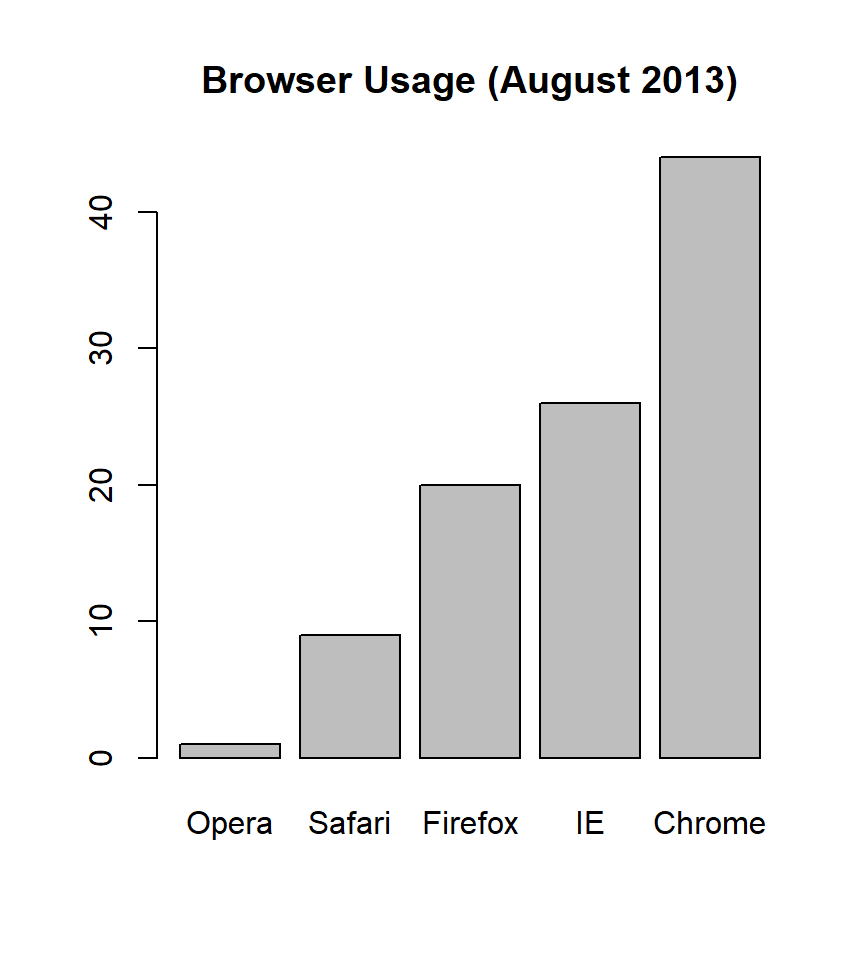

40.1.1.3 A Better Alternative: Bar Charts

If we need to visualize this data more effectively, a bar chart is more appropriate:

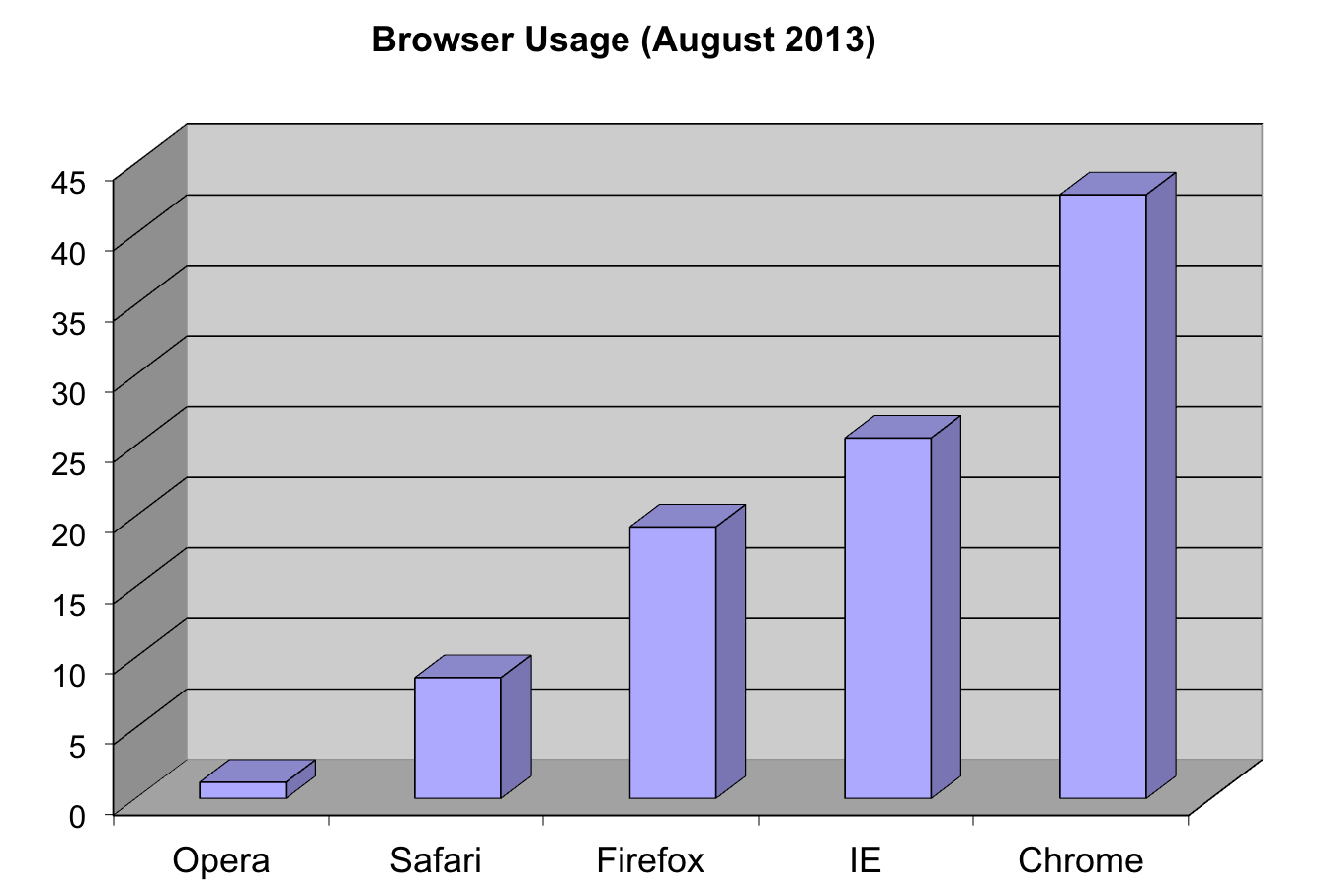

The bar chart aligns the data vertically, allowing for easy comparison across browsers. You can follow a horizontal line from the top of a bar across to the y-axis to find precise percentages, avoiding the visual estimation errors common with pie charts. (Do avoid 3-D versions, as they obfuscate the plot and remove this particular advantage.)
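As a minimal sketch, a bar chart of this kind could be produced in base R from the same `browsers` vector used for the pie chart. The axis label, the y-axis limits, and the light reference lines are choices made here for illustration and are not taken from the original figure.

```r
# Browser shares (percent) from the August 2013 poll used above
browsers <- c(Opera = 1, Safari = 9, Firefox = 20, IE = 26, Chrome = 44)

# Bars share a common baseline, so their heights can be compared directly
barplot(
  browsers,
  main = "Browser Usage (August 2013)",
  ylab = "Percent",
  ylim = c(0, 50)
)

# Light horizontal reference lines help when reading values off the y-axis
abline(h = seq(0, 50, by = 10), col = "gray", lty = 3)
```

The help file quoted earlier also suggests a dot chart; `dotchart(browsers)` would be the base R counterpart.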

Note that donut plots are even worse than pie charts.

The reason is that by removing the center we remove one of the visual cues for determining the different areas: the angles. There is no reason to ever use a donut to display data.

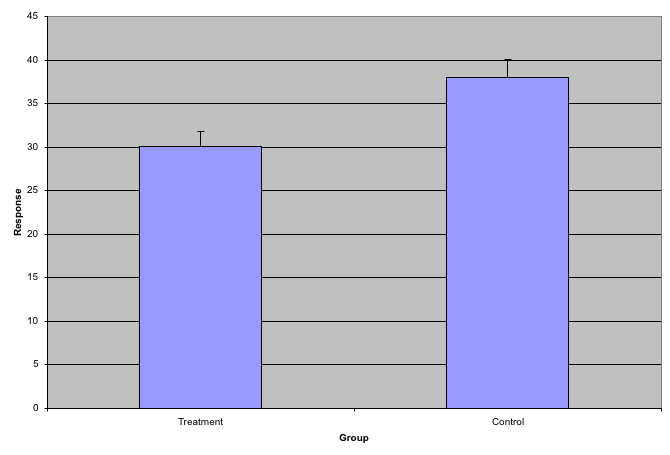

40.1.2 Barplots as data summaries

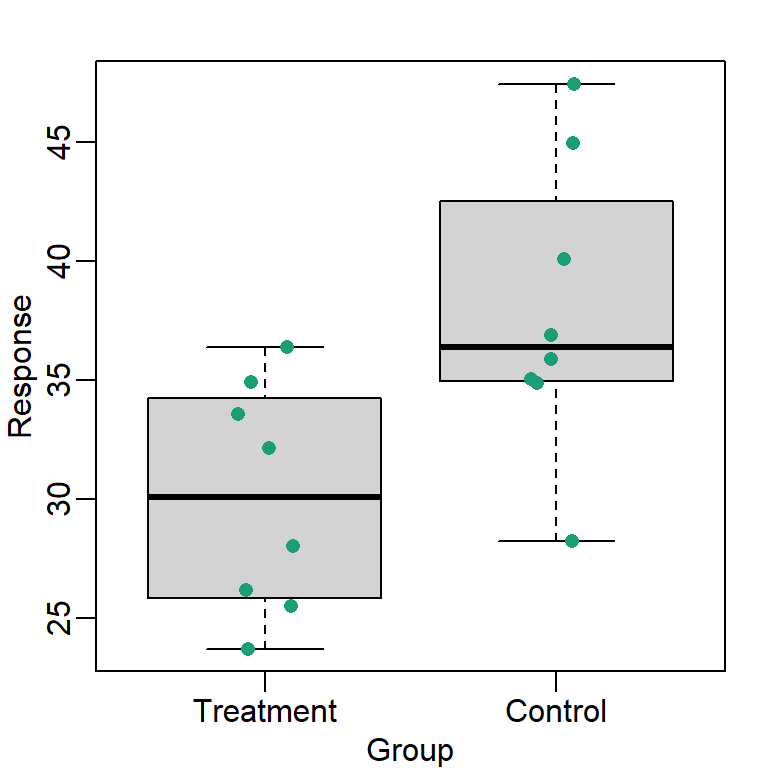

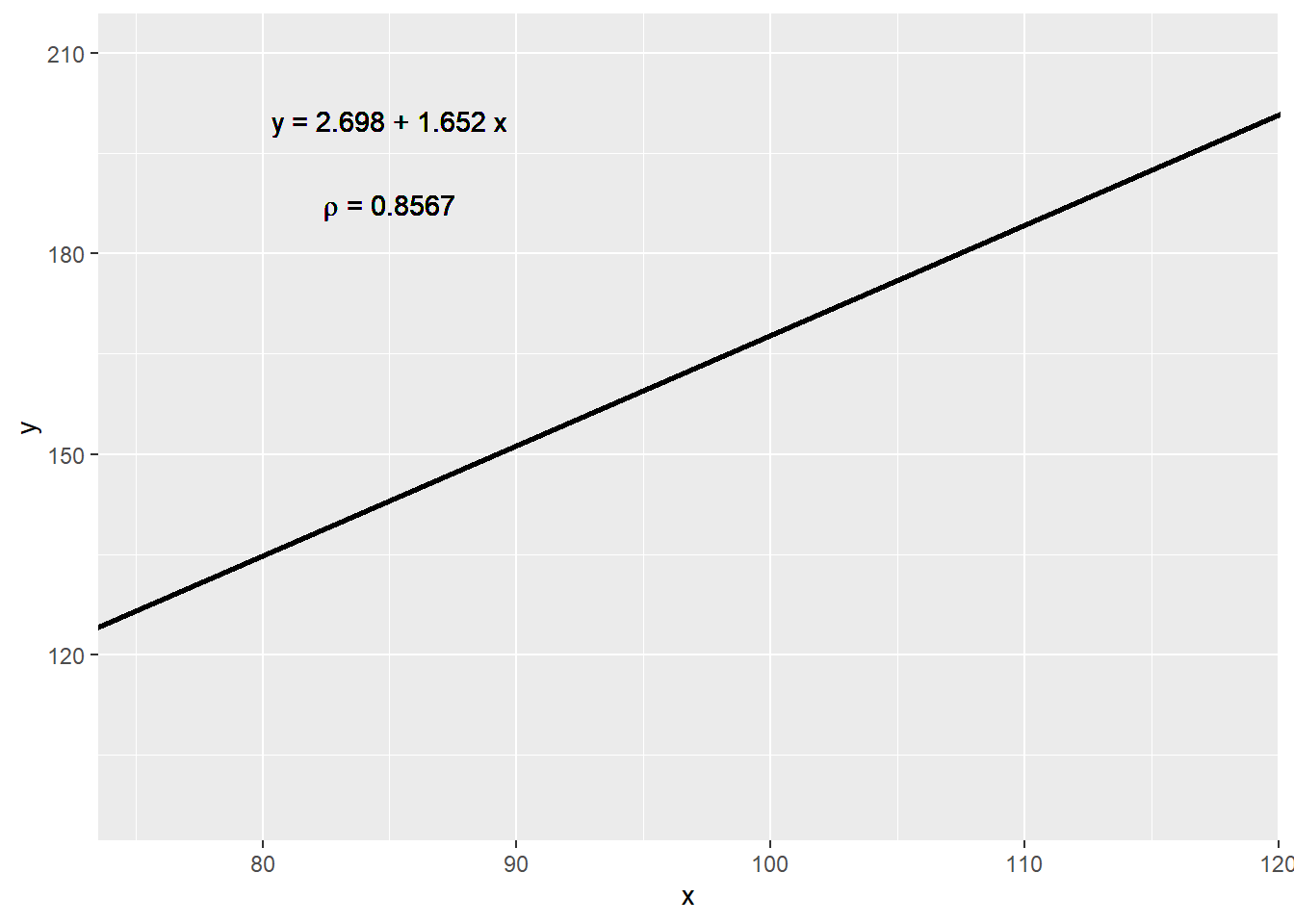

Although barplots are useful for showing percentages, they are often incorrectly used to display data from two groups being compared. Specifically, barplots are created with height equal to the group means, and an antenna is added at the top to represent standard errors. Such a plot simply shows two numbers per group and adds nothing:
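For reference, the following sketch builds such a bar-plus-antenna plot from simulated data. The data, the seed, and the object names `sim`, `means`, `ses`, and `mids` are hypothetical; the point is only that the whole figure is determined by a mean and a standard error per group.

```r
set.seed(4)  # arbitrary seed, an assumption

# Hypothetical responses for two groups of 15 observations each
sim <- list(
  Treatment = rnorm(15, mean = 12, sd = 3),
  Control = rnorm(15, mean = 10, sd = 3)
)

# The only quantities the plot uses: one mean and one standard error per group
means <- sapply(sim, mean)
ses <- sapply(sim, function(v) sd(v) / sqrt(length(v)))

# barplot() returns the x positions of the bar midpoints
mids <- barplot(means, ylim = c(0, max(means + ses) * 1.1), ylab = "Response")

# The "antenna": a segment from the mean up to mean + SE with a flat cap
arrows(mids, means, mids, means + ses, angle = 90, length = 0.1)
```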

It is much more informative to summarize the data with a boxplot. If the number of points is small enough, we might as well add them to the plot. When the number of points is too large for us to see them, just showing a boxplot is preferable.

# library("downloader")

# tmpfile <- tempfile()

# download("https://github.com/kbroman/Talk_Graphs/raw/master/R/fig1.RData")

# load("data/badgraphfig1.RData")

library(tidyverse)

library(rafalib)

df_fig1 <- read.csv("data/bromanfig1.csv")

mypar(1, 1)

dat <- list(

Treatment = df_fig1$x,

Control = df_fig1$y

)

dat %>%

boxplot(

xlab = "Group",

ylab = "Response",

cex = 0

)

dat %>%

stripchart(

vertical = TRUE,

method = "jitter",

pch = 16,

add = TRUE,

col = 1

)

Note how much more we see here: the center, spread, range, and the points themselves. In the barplot we only see the mean and the SE, and the SE has more to do with sample size than with the spread of the data.
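The remark about the SE can be checked with a small simulation. This is a sketch on hypothetical data: the two samples, the seed, and the helper `se()` are introduced here for illustration. Both samples are drawn from the same distribution, so their spread is similar, but the standard error shrinks as the sample size grows.

```r
set.seed(1)  # arbitrary seed, an assumption

# Hypothetical helper: standard error of the mean
se <- function(v) sd(v) / sqrt(length(v))

# Two samples from the same distribution, differing only in size
small <- rnorm(10, mean = 5, sd = 2)
large <- rnorm(1000, mean = 5, sd = 2)

spread <- c(small = sd(small), large = sd(large))
std_err <- c(small = se(small), large = se(large))

# SD estimates the spread of the data; SE mostly reflects the sample size
rbind(SD = spread, SE = std_err)
```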

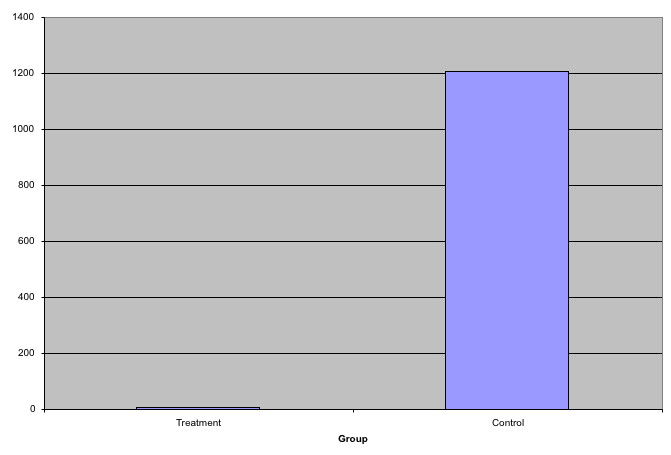

This problem is magnified when our data have outliers or very heavy tails. Note that from this plot there appears to be a very large and consistent difference between the two groups:

A quick look at the data demonstrates that this difference is mostly driven by just two points. A version showing the data in the log-scale is much more informative.

df_fig3 <- read.csv("data/bromanfig3.csv")

library(rafalib)

mypar(1, 2)

dat <- list(

Treatment = df_fig3$x,

Control = df_fig3$y

)

dat %>%

boxplot(

xlab = "Group",

ylab = "Response",

cex = 0

)

dat %>%

stripchart(

vertical = TRUE,

method = "jitter",

pch = 16,

add = TRUE,

col = 1

)

dat %>%

boxplot(

xlab = "Group",

ylab = "Response",

log = "y",

cex = 0

)

dat %>%

stripchart(

vertical = TRUE,

method = "jitter",

pch = 16,

add = TRUE,

col = 1

)
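To see how just two points can drive a difference in means, consider the following sketch. The data are simulated, not the values in `bromanfig3.csv`, and the object names and seed are assumptions: both groups come from the same distribution, except that two extreme values are added to one of them.

```r
set.seed(3)  # arbitrary seed, an assumption

# Hypothetical responses: both groups come from the same log-normal distribution
control <- exp(rnorm(20, mean = 1, sd = 0.5))

# The treatment group additionally contains two extreme values
treatment <- c(exp(rnorm(18, mean = 1, sd = 0.5)), 60, 85)

# The difference in means is dominated by the two extreme points
diff_mean <- mean(treatment) - mean(control)

# The difference in medians is far less affected
diff_median <- median(treatment) - median(control)

# Dropping the two largest treatment values removes most of the mean difference
diff_trimmed <- mean(sort(treatment)[1:18]) - mean(control)

c(mean = diff_mean, median = diff_median, without_top_two = diff_trimmed)
```

A barplot of the two means would show only the first of these three numbers.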

40.1.3 Show the scatterplot

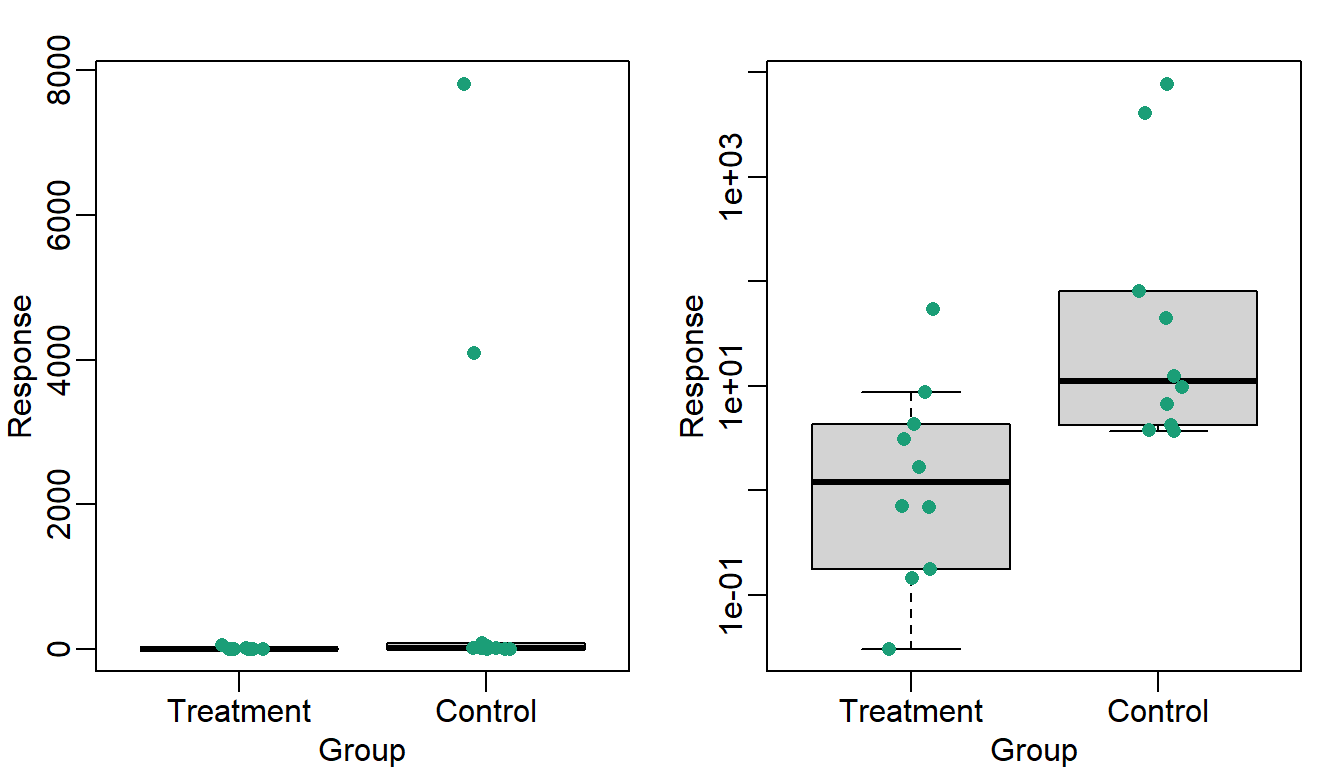

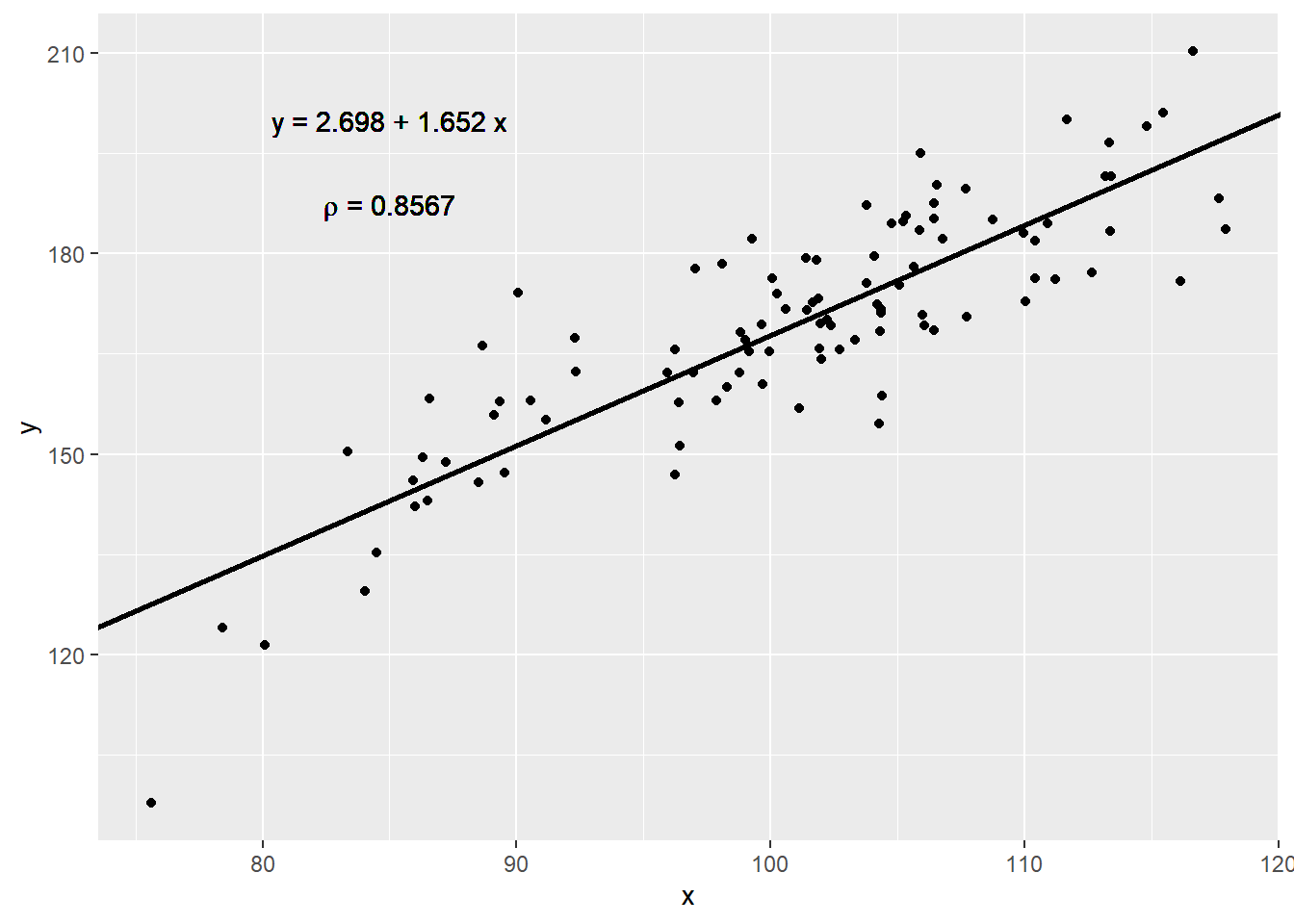

The purpose of many statistical analyses is to determine relationships between two variables. Sample correlations are typically reported, and sometimes plots are displayed to show this. However, showing just the regression line is one way to display your data badly, as it hides the scatter. Surprisingly, plots such as the following are commonly seen:

df_fig4 <- read.csv("data/bromanfig4.csv")

fit <- lm(y ~ x,

data = df_fig4

)

rho <- (round(

cor(

df_fig4$x,

df_fig4$y

),

4

))

ggplot(

data = df_fig4,

aes(x = x, y = y)

) +

geom_point(alpha = 0) +

geom_abline(

intercept = fit$coef[1],

slope = fit$coef[2], lwd = 1

) +

geom_text(

x = 85, y = 200,

label = paste(

"y =", round(fit$coef[1], 3),

"+", round(fit$coef[2], 3), "x"

)

) +

geom_text(

x = 85, y = 187,

label = expression(paste(rho, " = 0.8567"))

)

Showing the data is much more informative:

ggplot(data = df_fig4, aes(x = x, y = y)) +

geom_point() +

geom_abline(

intercept = fit$coef[1],

slope = fit$coef[2], lwd = 1

) +

geom_text(

x = 85, y = 200,

label = paste(

"y =", round(fit$coef[1], 3),

"+", round(fit$coef[2], 3), "x"

)

) +

geom_text(

x = 85, y = 187,

label = expression(paste(rho, " = 0.8567"))

)

40.2 High correlation does not imply replication

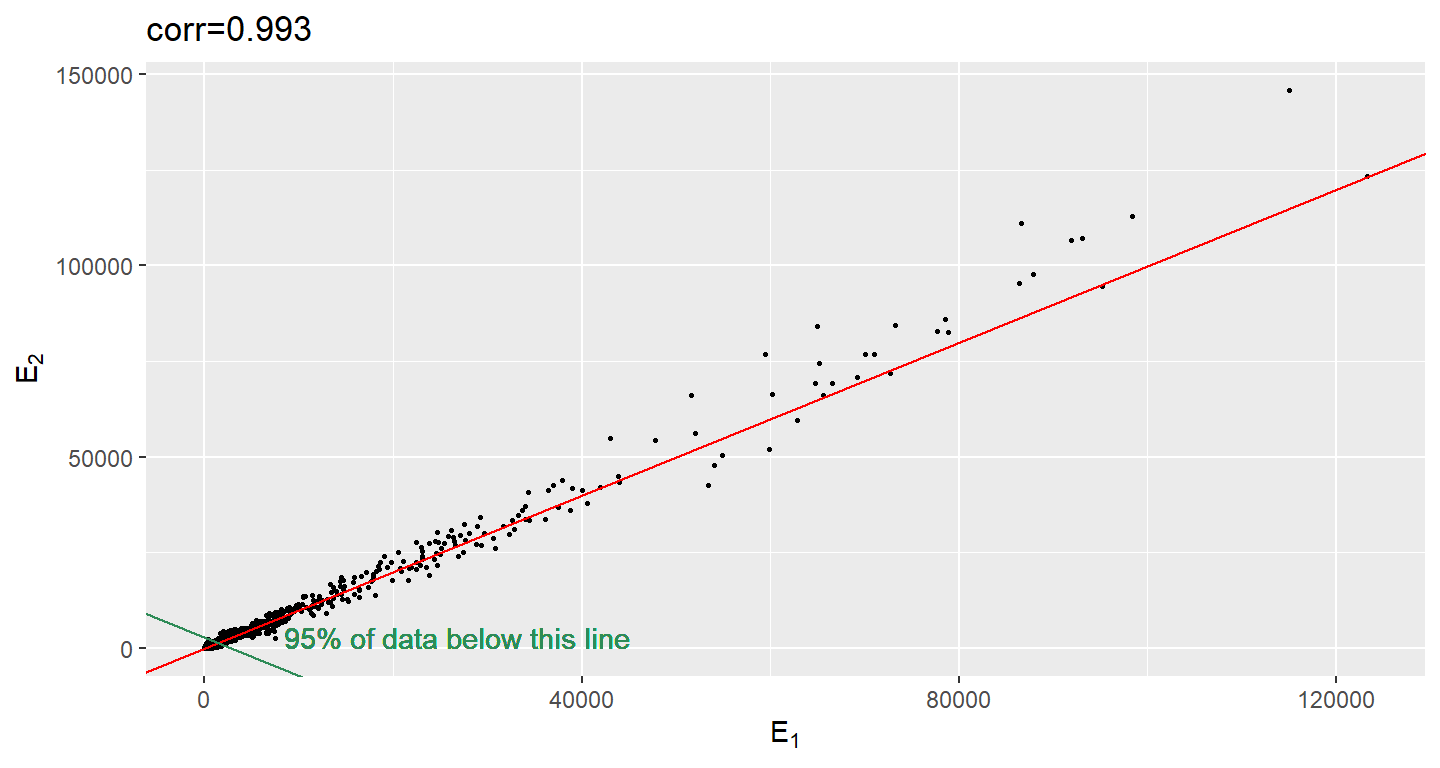

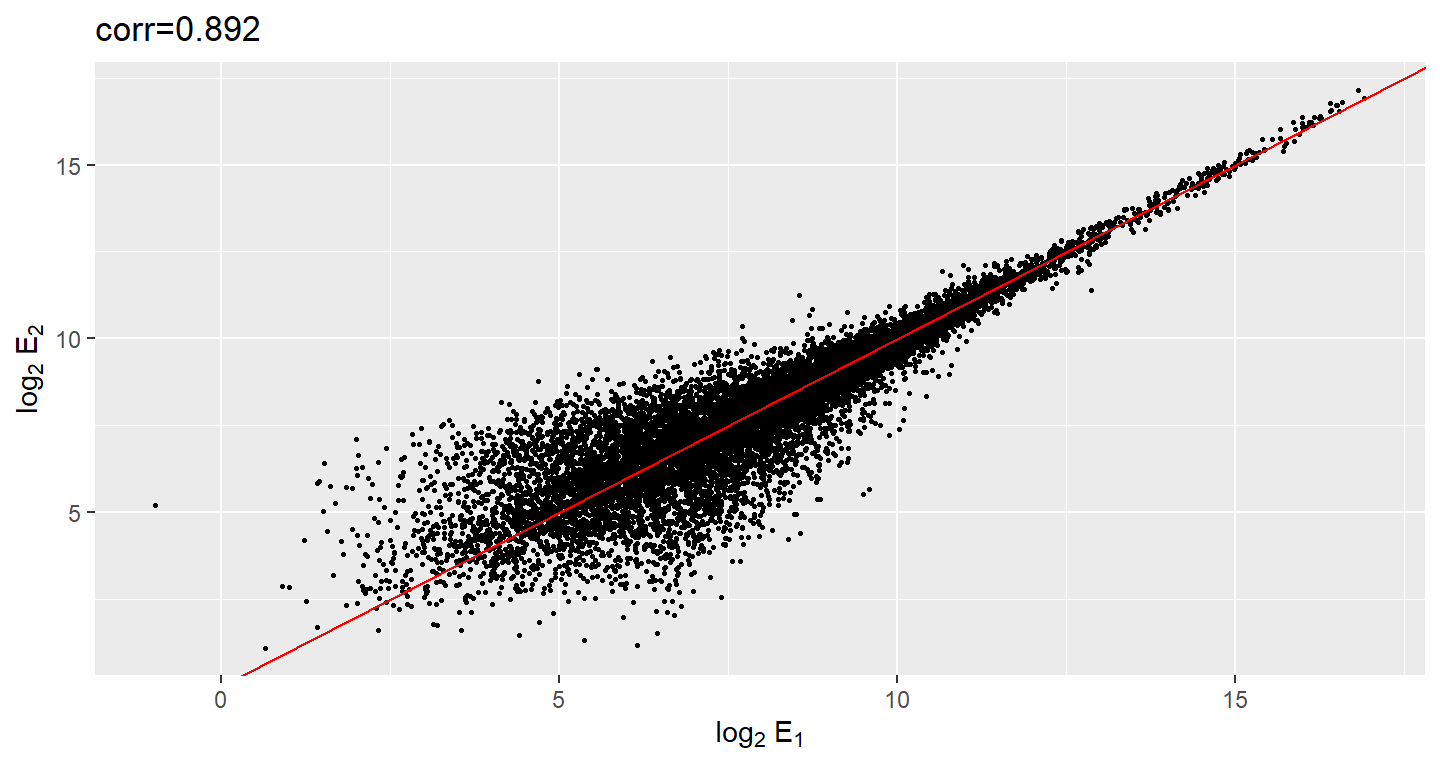

When new technologies or laboratory techniques are introduced, we are often shown scatter plots and correlations from replicated samples. High correlations are used to demonstrate that the new technique is reproducible. But correlation can be very misleading. Below is a scatter plot showing data from replicated samples run on a high-throughput technology. This technology outputs 12,626 simultaneous measurements.

In the plot on the left we see the original data which shows very high correlation. But the data follows a distribution with very fat tails. Note that 95% of the data is below the green line. The plot on the right is in the log scale.

# if (!requireNamespace("BiocManager", quietly = TRUE)) install.packages("BiocManager")

# BiocManager::install("Biobase")

# BiocManager::install("SpikeInSubset")

library(Biobase)

library(SpikeInSubset)

data(mas95)

mypar(1, 2)

df_mas95 <- data.frame(

r = exprs(mas95)[, 1], ## original measures were not logged

g = exprs(mas95)[, 2]

)

f <- function(a,

x,

y,

p = 0.95) {

mean(x <= a & y <= a) - p

}

a95 <- uniroot(

f,

lower = 2000,

upper = 20000,

x = df_mas95$r,

y = df_mas95$g

)$root

string_cor <- signif(cor(df_mas95$r, df_mas95$g), 3)

ggplot(

data = df_mas95,

aes(x = r, y = g)

) +

geom_point(color = "black", size = 0.5) +

geom_abline(slope = 1, intercept = 0, color = "red") +

xlab(expression(paste(E[1]))) +

ylab(expression(paste(E[2]))) +

ggtitle(paste0("corr=", string_cor)) +

geom_abline(slope = -1, intercept = a95, color = "seagreen") +

geom_text(

x = 8500, y = 0,

label = "95% of data below this line", vjust = "inward", hjust = "inward",

color = "seagreen"

)

df_mas95 <- df_mas95 %>%

mutate(

r = log2(r),

g = log2(g)

)

string_cor <- signif(cor(df_mas95$r, df_mas95$g), 3)

ggplot(

data = df_mas95,

aes(x = r, y = g)

) +

geom_point(color = "black", size = 0.5) +

geom_abline(slope = 1, intercept = 0, color = "red") +

xlab(expression(paste(log[2], " ", E[1]))) +

ylab(expression(paste(log[2], " ", E[2]))) +

ggtitle(paste0("corr=", string_cor))

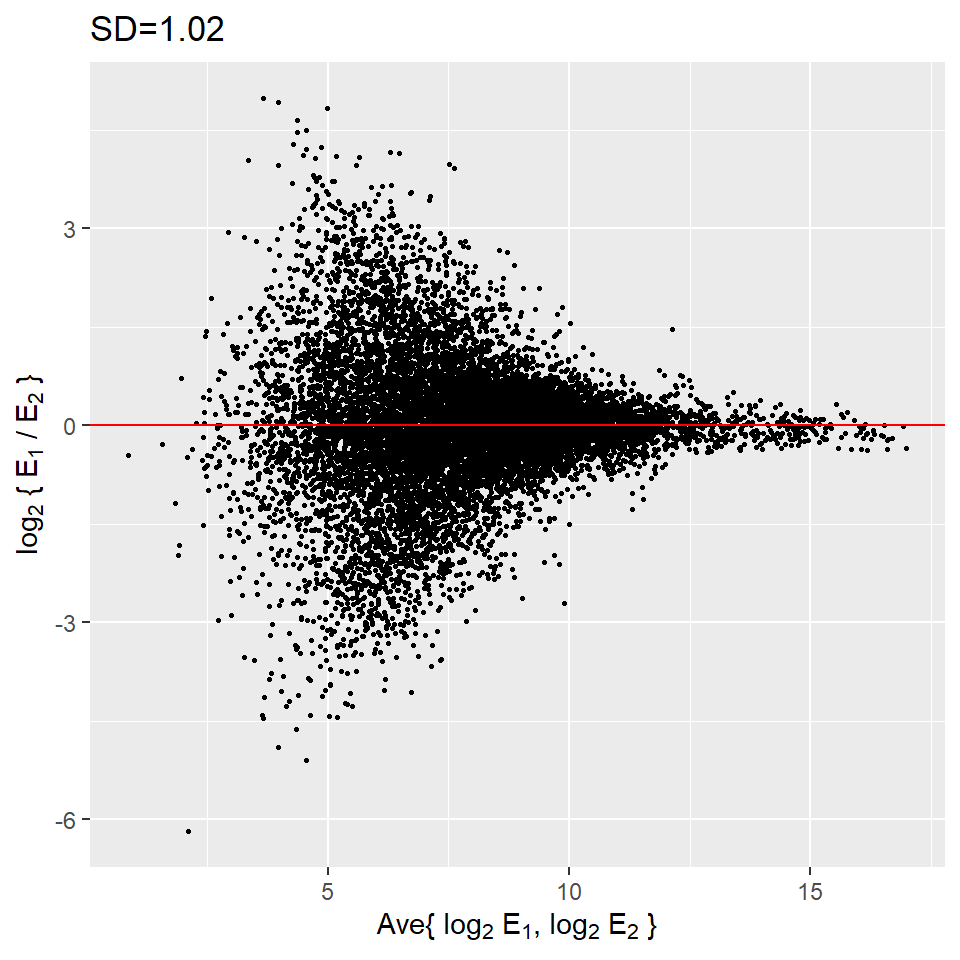

Although the correlation is reduced in the log-scale, it is still close to 1. Does this mean these data are reproduced? To examine how well the second vector reproduces the first, we need to study the differences. Therefore, we should instead plot the difference (in the log scale) versus the average:

df_mas95 <- df_mas95 %>%

mutate(

x = (r + g) / 2,

y = (r - g)

)

string_sd <- signif(sqrt(mean((df_mas95$r - df_mas95$g)^2)), 3)

ggplot(data = df_mas95, aes(x = x, y = y)) +

geom_point(size = .5, color = "black") +

geom_hline(yintercept = 0, color = "red") +

xlab(expression(paste("Ave{ ", log[2], " ", E[1], ", ", log[2], " ", E[2], " }"))) +

ylab(expression(paste(log[2], " { ", E[1], " / ", E[2], " }"))) +

ggtitle(paste0("SD=", string_sd))

These are referred to as Bland-Altman plots, or MA plots in the genomics literature, and we will say more about them later. In this plot we see that the typical difference in the log (base 2) scale between two replicated measures is about 1. This means that when measurements should be the same we will, on average, observe a 2-fold difference. We can now compare this variability to the differences we want to detect and decide if this technology is precise enough for our purposes.
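For readers without the Bioconductor packages, the same reasoning can be tried on simulated data. The sketch below does not use the mas95 data: the true signal, the noise level of 0.7, the seed, and all object names are assumptions, chosen so that the typical log2 difference comes out near 1 while the correlation stays fairly high.

```r
set.seed(1)  # arbitrary seed, an assumption
n <- 10000

# Hypothetical true signal on the log2 scale, shared by both replicates
truth <- rnorm(n, mean = 7, sd = 2)

# Two replicated measurements: same signal, independent measurement noise
r <- truth + rnorm(n, sd = 0.7)
g <- truth + rnorm(n, sd = 0.7)

# Correlation between replicates on the log2 scale
cor_log <- cor(r, g)

# Typical size of the difference between replicates (root mean square)
sd_diff <- sqrt(mean((r - g)^2))

# A log2 difference of d corresponds to a fold change of 2^d
fold <- 2^sd_diff

# Difference versus average, in the spirit of the plot above
plot(
  (r + g) / 2, r - g,
  pch = 16, cex = 0.3,
  xlab = "Average of log2 measurements",
  ylab = "Difference of log2 measurements",
  main = paste0("corr=", signif(cor_log, 3), ", SD=", signif(sd_diff, 3))
)
abline(h = 0, col = "red")
```

The correlation alone says little about whether a 2-fold difference between replicates is acceptable; the difference plot makes that question explicit.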

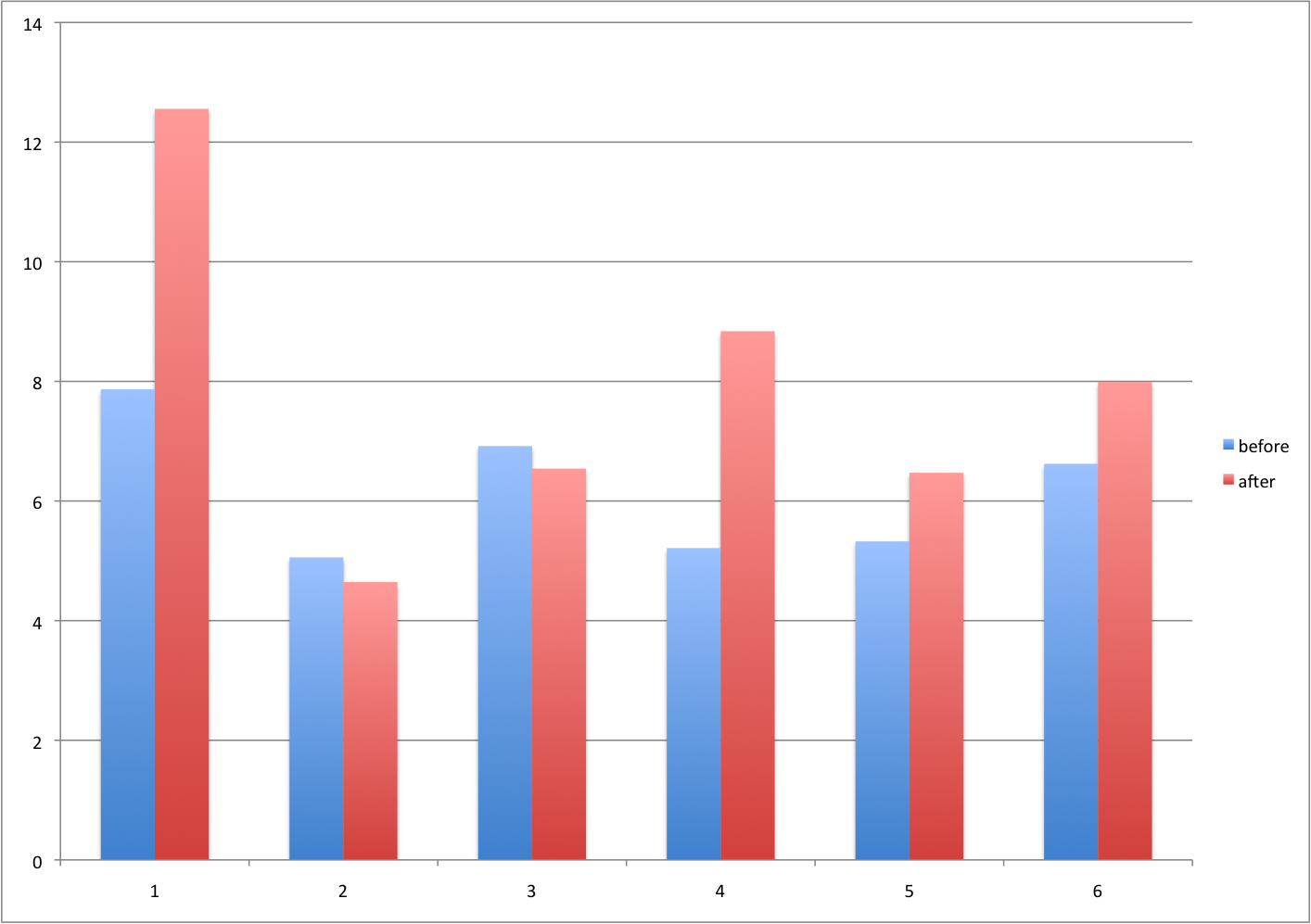

40.3 Barplots for paired data

A common task in data analysis is the comparison of two groups. When the dataset is small and data are paired, for example outcomes before and after a treatment, an unfortunate display that is used is the barplot with two colors:

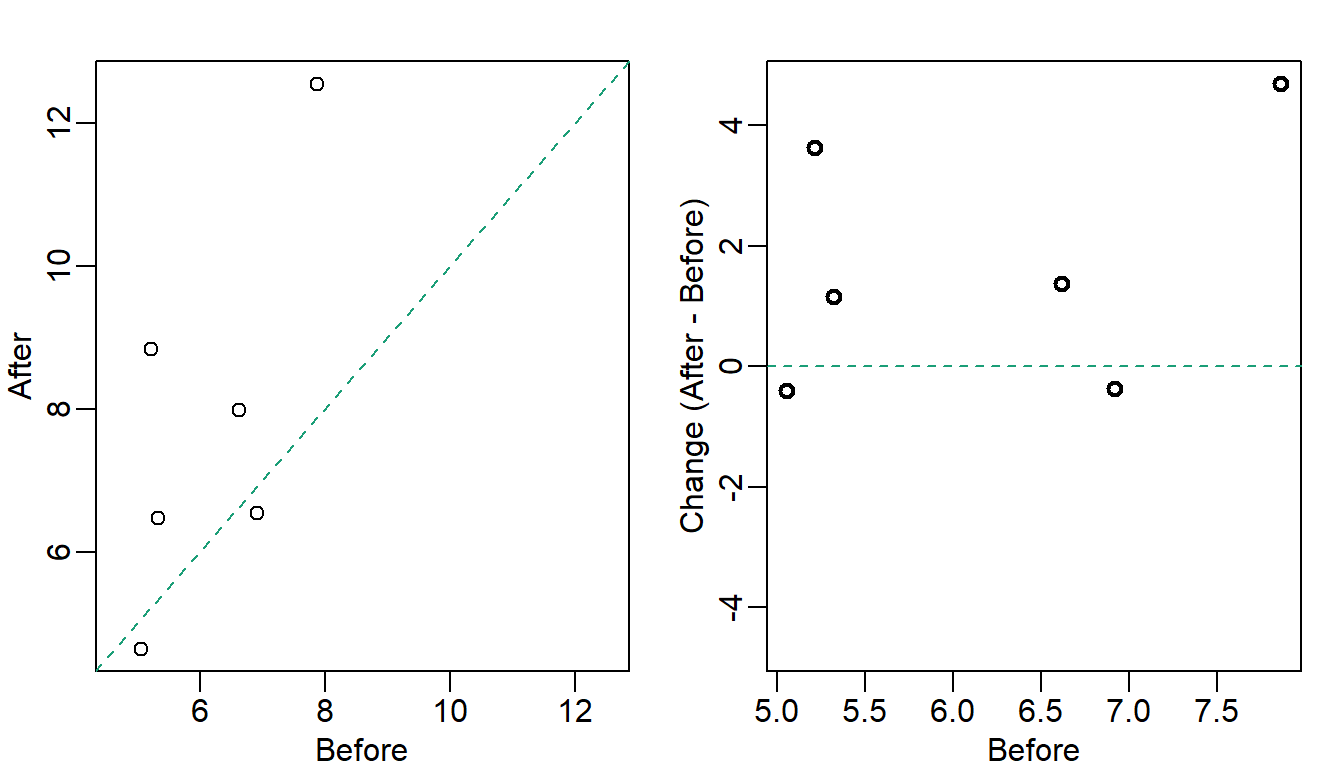

There are various better ways of showing these data to illustrate that there is an increase after treatment. One is to simply make a scatterplot, which shows that most points are above the identity line. Another alternative is to plot the differences against the before values.

set.seed(12201970)

before <- runif(6, 5, 8)

after <- rnorm(6, before * 1.05, 2)

li <- range(c(before, after))

ymx <- max(abs(after - before))

mypar(1, 2)

plot(

before,

after,

xlab = "Before",

ylab = "After",

ylim = li,

xlim = li

)

abline(0, 1, lty = 2, col = 1)

plot(

before,

after - before,

xlab = "Before",

ylim = c(-ymx, ymx),

ylab = "Change (After - Before)",

lwd = 2

)

abline(h = 0, lty = 2, col = 1)

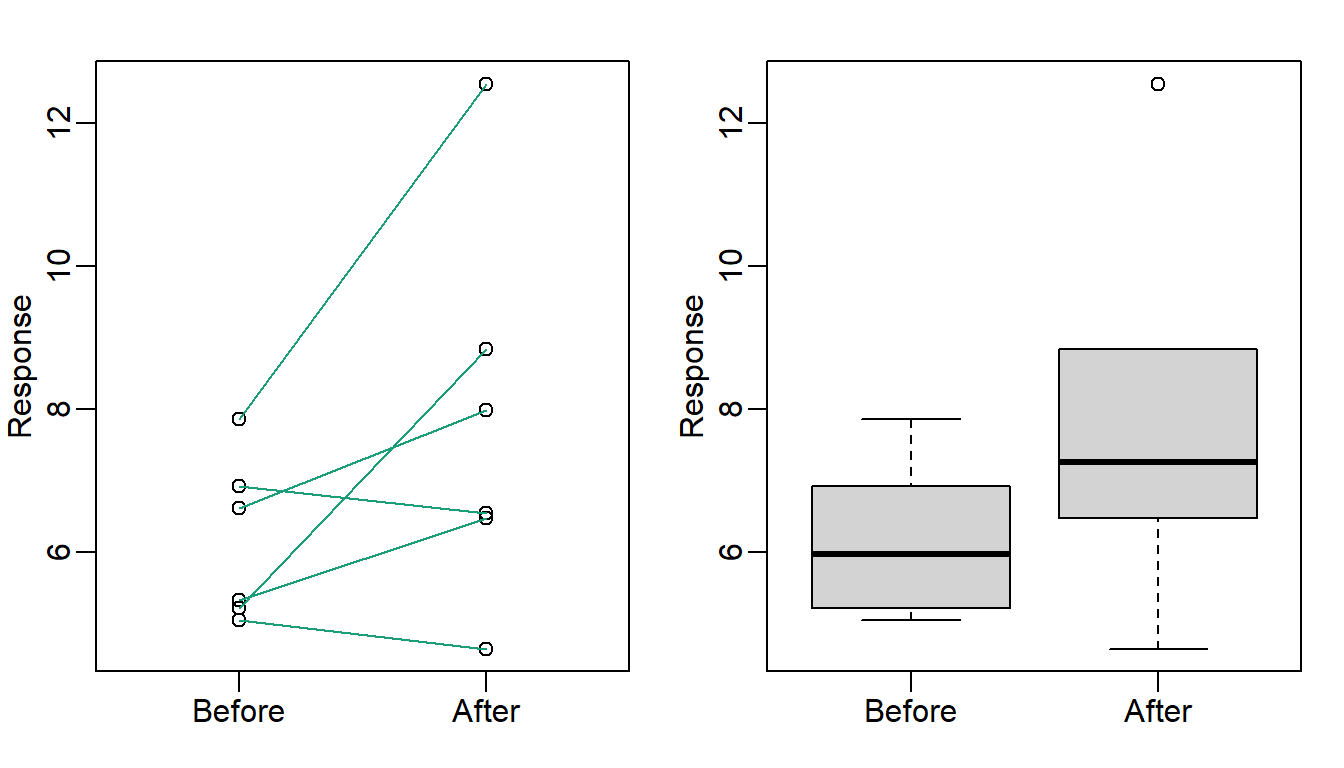

Line plots are not a bad choice, although I find them harder to follow than the previous two. Boxplots show you the increase, but lose the paired information.

z <- rep(c(0, 1), rep(6, 2))

mypar(1, 2)

plot(

z,

c(before, after),

xaxt = "n",

ylab = "Response",

xlab = "",

xlim = c(-0.5, 1.5)

)

axis(side = 1, at = c(0, 1), c("Before", "After"))

segments(rep(0, 6), before, rep(1, 6), after, col = 1)

boxplot(before,

after,

names = c("Before", "After"),

ylab = "Response"

)

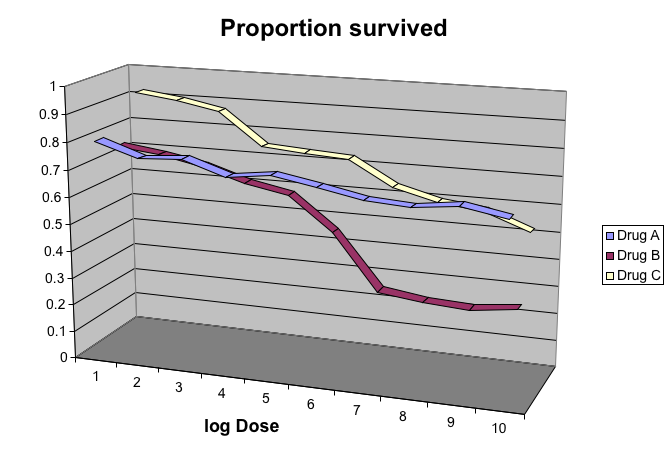

40.4 Gratuitous 3D

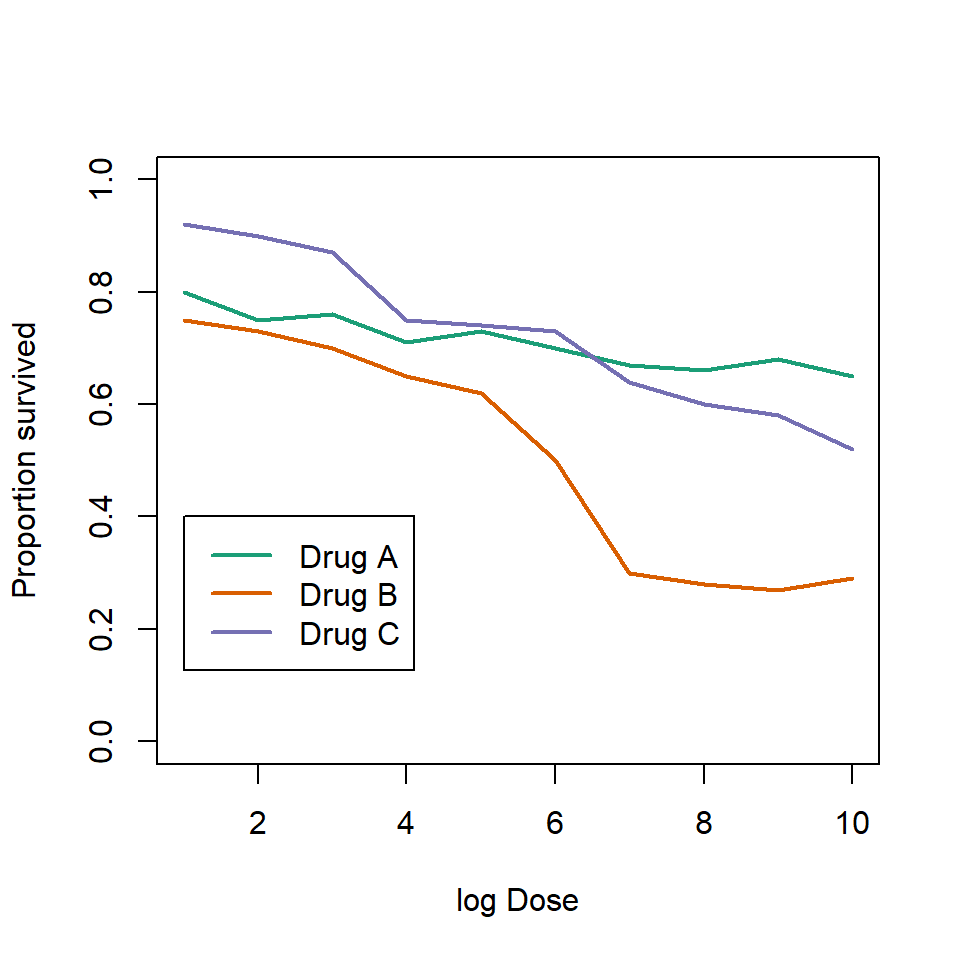

The following figure shows three curves. Pseudo-3D is used, but it is not clear why. Maybe to separate the three curves? Note how difficult it is to determine the values of the curves at any given point:

This plot can be made better by simply using color to distinguish the three lines:

# download("https://github.com/kbroman/Talk_Graphs/raw/master/R/fig8dat.csv",tmpfile)

x <- read.table("data/fig8dat.csv", sep = ",", header = TRUE)

plot(

x[, 1],

x[, 2],

xlab = "log Dose",

ylab = "Proportion survived",

ylim = c(0, 1),

type = "l",

lwd = 2,

col = 1

)

lines(x[, 1], x[, 3], lwd = 2, col = 2)

lines(x[, 1], x[, 4], lwd = 2, col = 3)

legend(1,

0.4,

c("Drug A", "Drug B", "Drug C"),

lwd = 2,

col = 1:3

)

40.5 Ignoring important factors

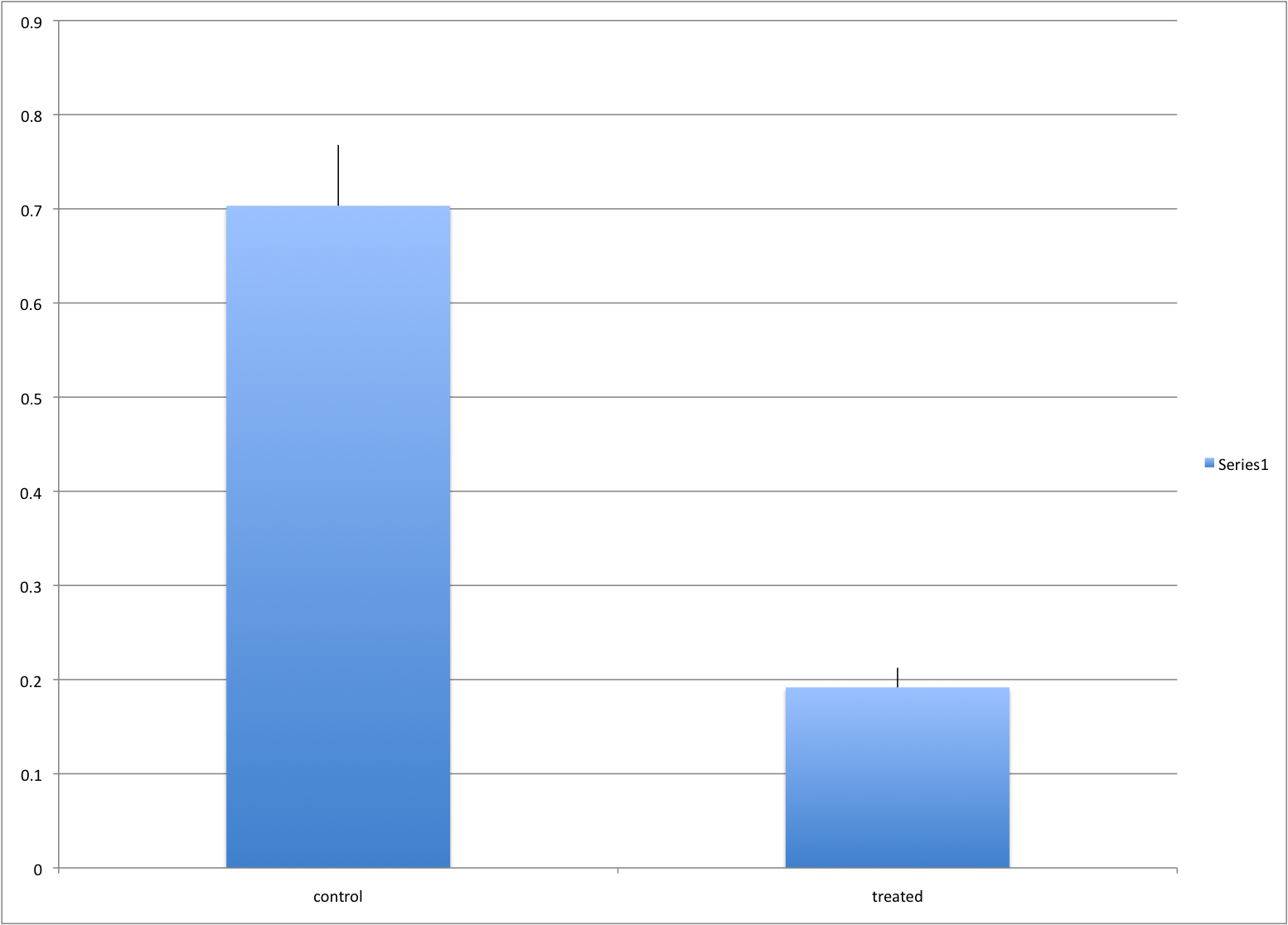

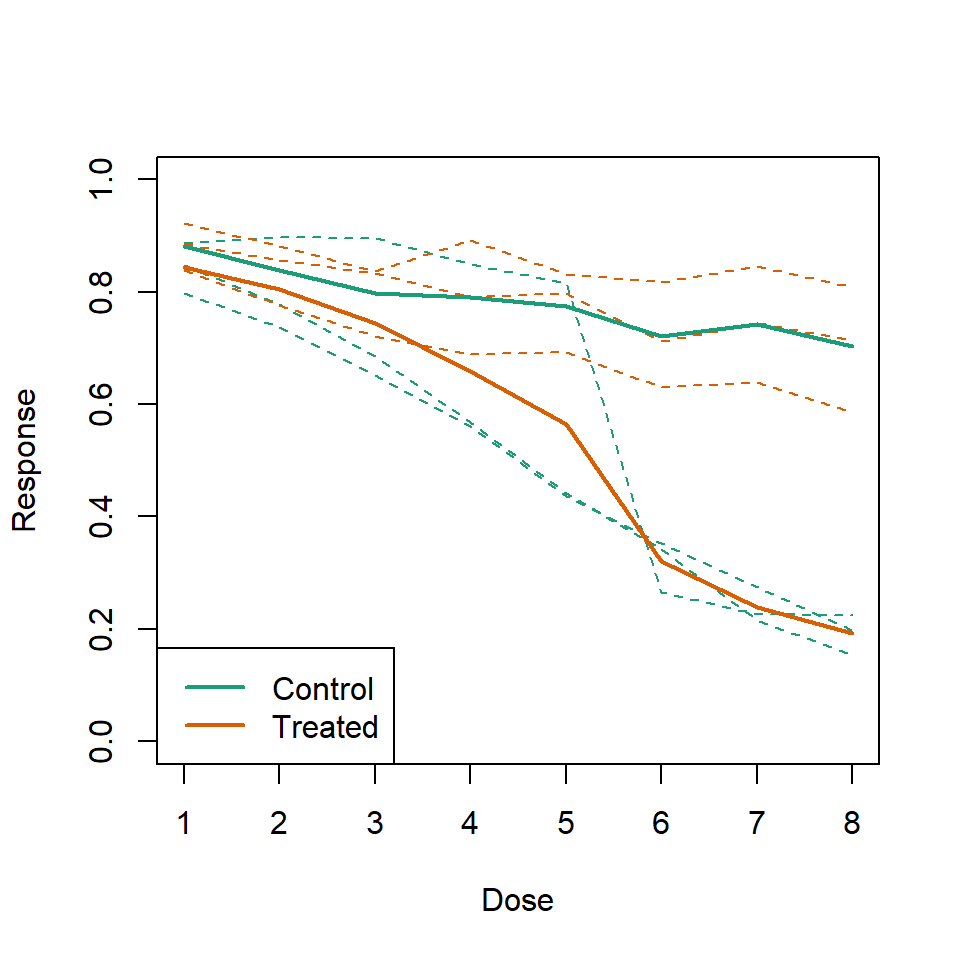

In this example we generate data with a simulation. We are studying a dose-response relationship in two groups, treatment and control. We have three groups of measurements for both control and treatment. A common choice is to compare treatment and control using a barplot:

Instead we should show each curve. We can use color to distinguish treatment and control and dashed and solid lines to distinguish the original data from the mean of the three groups.
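The plotting code that follows uses the objects `x`, `y1`, `y`, `z`, `ym`, and `zm`, whose simulation is not shown in this section. The sketch below is one hypothetical way to create objects with those names so the plot can be run; the dose levels, the logistic curve shape, the parameter values, the seed, and the helper `ilogit()` are all assumptions, not the original simulation.

```r
set.seed(2013)  # arbitrary seed, an assumption

# Hypothetical dose levels
x <- 1:8

# Inverse logit, so that responses stay between 0 and 1
ilogit <- function(u) 1 / (1 + exp(-u))

n_groups <- 3

# Each group gets its own random shift: the factor a pooled barplot would hide.
# Rows correspond to doses, columns to groups.
y <- sapply(seq_len(n_groups), function(i) ilogit(rnorm(1, 1.5, 0.8) - 0.3 * x))  # control
z <- sapply(seq_len(n_groups), function(i) ilogit(rnorm(1, 0.5, 0.8) - 0.3 * x))  # treated

# First control curve, used only to set up the empty plot
y1 <- y[, 1]

# Mean curve across the three groups, per dose
ym <- rowMeans(y)
zm <- rowMeans(z)
```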

plot(

x,

y1,

ylim = c(0, 1),

type = "n",

xlab = "Dose",

ylab = "Response"

)

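# dashed lines: the three individual y curves, in the same color as their mean ym

for (i in 1:3) {

lines(x,

y[, i],

col = 1,

lwd = 1,

lty = 2

)

}

# dashed lines: the three individual z curves, in the same color as their mean zm

for (i in 1:3) {

lines(x,

z[, i],

col = 2,

lwd = 1,

lty = 2

)

}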

lines(x, ym, col = 1, lwd = 2)

lines(x, zm, col = 2, lwd = 2)

legend("bottomleft",

lwd = 2,

col = c(1, 2),

c("Control", "Treated")

)

40.6 Too many significant digits

By default, statistical software like R returns many significant digits. This does not mean we should report them. Copying and pasting directly from R is a bad idea, as you might end up showing a table like this for, say, heights of basketball players:

heights <- cbind(

rnorm(8, 73, 3),

rnorm(8, 73, 3),

rnorm(8, 80, 3),

rnorm(8, 78, 3),

rnorm(8, 78, 3)

)

colnames(heights) <- c("SG", "PG", "C", "PF", "SF")

rownames(heights) <- paste("team", 1:8)

heights

#>          SG   PG    C   PF   SF

#> team 1 76.4 76.2 81.7 75.3 77.2

#> team 2 74.1 71.1 80.3 81.6 73.0

#> team 3 71.5 69.0 85.8 80.1 72.8

#> team 4 78.7 72.8 81.3 76.3 82.9

#> team 5 73.4 73.3 79.2 79.7 80.3

#> team 6 72.9 71.8 77.4 81.7 80.4

#> team 7 68.4 73.0 79.1 71.2 77.2

#> team 8 73.8 75.6 83.0 75.6 87.7

Note that with R's default of seven significant digits we would be reporting precision up to 0.00001 inches (the output above has already been shortened). Do you know of a tape measure with that much precision? This can be easily remedied:

round(heights, 1)

#>          SG   PG    C   PF   SF

#> team 1 76.4 76.2 81.7 75.3 77.2

#> team 2 74.1 71.1 80.3 81.6 73.0

#> team 3 71.5 69.0 85.8 80.1 72.8

#> team 4 78.7 72.8 81.3 76.3 82.9

#> team 5 73.4 73.3 79.2 79.7 80.3

#> team 6 72.9 71.8 77.4 81.7 80.4

#> team 7 68.4 73.0 79.1 71.2 77.2

#> team 8 73.8 75.6 83.0 75.6 87.7

40.7 Displaying data well

In general you should follow these principles:

- Be accurate and clear.

- Let the data speak.

- Show as much information as possible, taking care not to obscure the message.

- Science not sales: avoid unnecessary frills (esp. gratuitous 3d).

- In tables, every digit should be meaningful. Don’t drop ending 0s.
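The last principle has a practical wrinkle in R: rounding a number does not keep its trailing zero when the value is converted to text. The sketch below uses a hypothetical height `h` and illustrative variable names to show two standard ways of keeping a fixed number of decimals.

```r
h <- 73.04  # hypothetical height in inches

# Rounding alone: the trailing zero is lost when the number becomes text
dropped <- as.character(round(h, 1))

# format() with nsmall keeps at least one digit after the decimal point
kept_format <- format(round(h, 1), nsmall = 1)

# sprintf() with a fixed-precision format string gives the same result
kept_sprintf <- sprintf("%.1f", h)

c(dropped = dropped, format = kept_format, sprintf = kept_sprintf)
```

Here `dropped` is `"73"`, while the other two are `"73.0"`, which tells the reader that the value is known to one decimal place.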

40.8 Some further reading

ER Tufte (1983) The visual display of quantitative information. Graphics Press.

ER Tufte (1990) Envisioning information. Graphics Press.

ER Tufte (1997) Visual explanations. Graphics Press.

WS Cleveland (1993) Visualizing data. Hobart Press.

WS Cleveland (1994) The elements of graphing data. CRC Press.

A Gelman, C Pasarica, R Dodhia (2002) Let’s practice what we preach: Turning tables into graphs. The American Statistician 56:121-130.

NB Robbins (2004) Creating more effective graphs. Wiley.